My last two posts were about a hurricane and a colonoscopy, so I thought it was time to write about some math again.

For the last five years Jonathan Mattingly has worked on a problem with important political ramifications: what would a typical set of congressional districts (say the 13 districts in North Carolina) look like if they were chosen at “random” subject to the restrictions that they contain a roughly equal number of voters, are connected, and minimize the splitting of counties? The motivation for this question can be explained by looking at the current congressional districts in North Carolina. The tiny purple snake is district 12. It begins in Charlotte, goes up I-85 to Greensboro, and then wiggles around to contain other nearby cities, producing a district with a large percentage of Democrats.

To explain the key idea of gerrymandering, suppose, to keep the arithmetic simple, that a state has 2000 Democrats and 2000 Republicans. If there are four districts and we divide voters

| District | Republicans | Democrats |
|---|---|---|
| 1 | 600 | 400 |
| 2 | 600 | 400 |
| 3 | 600 | 400 |
| 4 | 200 | 800 |

then Republicans will win in 3 districts out of 4. This division extends easily to 12 districts in which the Republicans win 9. With a little more imagination and the help of a computer, one can produce the outcome of the 2016 election in North Carolina, in which 10 Republicans and 3 Democrats were elected despite the fact that the split between the parties is roughly 50-50.
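For readers who like to check arithmetic by machine, here is a minimal Python sketch of the four-district example. The function name `tally` and the dictionary layout are my own choices for illustration; the numbers are the ones in the table.

```python
# Toy check of the four-district example: equal statewide vote,
# lopsided seat count. The data layout here is purely illustrative.
districts = {
    1: {"R": 600, "D": 400},
    2: {"R": 600, "D": 400},
    3: {"R": 600, "D": 400},
    4: {"R": 200, "D": 800},
}


def tally(districts):
    """Return (statewide votes per party, districts won per party)."""
    votes = {"R": 0, "D": 0}
    seats = {"R": 0, "D": 0}
    for counts in districts.values():
        for party, n in counts.items():
            votes[party] += n
        # The party with more votes in the district wins the seat.
        winner = max(counts, key=counts.get)
        seats[winner] += 1
    return votes, seats


votes, seats = tally(districts)
print(votes)  # {'R': 2000, 'D': 2000}
print(seats)  # {'R': 3, 'D': 1}
```

Packing 800 Democrats into district 4 wastes 300 of their votes beyond the 501 needed to win it, which is what lets 600-400 margins carry the other three.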

The districts in the North Carolina map look odd, and the 7th district in Pennsylvania (nicknamed “Goofy Kicking Donald Duck”) looks ridiculous, but this is not proof of malice.

Mattingly, with a group of postdocs, graduate students, and undergraduates, has developed a statistical approach to this subject. To explain this we will consider a simple problem that can be analyzed using material taught in a basic probability or statistics class. A company has a machine that produces cans of tomatoes. On average a can contains a pound of tomatoes (16 ounces), but the machine is not very precise, so the weight has a standard deviation (a statistical measure of the “typical deviation” from the mean) of 0.2 ounces. If we assume the weight of tomatoes follows the normal distribution, then 68% of the time the weight will be between 15.8 and 16.2 ounces. To see if the machine is working properly an employee samples 16 cans and finds an average weight of 15.7 ounces.

To see if something is wrong we ask the question: if the machine were working properly, what is the probability that the average weight would be 15.7 ounces or less? The standard deviation of one observation is 0.2, but the standard deviation of the average of 16 observations is 0.2/√16 = 0.2/4 = 0.05. The observed average is 0.3 below the mean, or 6 standard deviations. Consulting a table of the normal distribution or using a calculator, we see that if the machine were working properly then an average of 15.7 or less would occur with probability less than 1/10,000.
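The calculation can be reproduced in a few lines of Python using only the standard library. This is a sketch under the same assumptions as the text (normally distributed weights, mean 16 ounces, standard deviation 0.2 ounces, 16 cans); the variable names are mine.

```python
import math

mean = 16.0      # target weight in ounces
sd = 0.2         # standard deviation of a single can, in ounces
n = 16           # number of cans sampled
observed = 15.7  # observed average weight, in ounces

# Standard deviation of the average of n independent observations.
se = sd / math.sqrt(n)

# Number of standard errors the observed average lies from the mean.
z = (observed - mean) / se

# Lower-tail probability P(Z <= z) for a standard normal variable,
# written with the complementary error function.
p = 0.5 * math.erfc(-z / math.sqrt(2))

print(f"standard error = {se:.3f}")
print(f"z = {z:.2f}")
print(f"P(average <= {observed}) = {p:.2e}")
```

The standard error comes out to 0.05 and z to about -6, and the tail probability is far smaller than the 1/10,000 bound quoted above.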

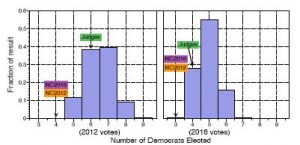

To approach gerrymandering, we ask a similar question: if the districts were drawn without looking at party affiliation, what is the probability that we would have 3 or fewer Democrats elected? This is a more complicated problem since one must generate a random sample from the collection of districts with the desired properties. To do this Mattingly’s team has developed methods that explore the space of possibilities by making successive small changes in the maps. Using this approach one has to make a large number of changes before one has a map that is “independent.” In a typical analysis they generate 24,000 maps. They found that when the votes were retallied using the randomly generated maps, three or fewer Democrats were elected in fewer than 1% of the scenarios. The next graphic shows results for the 2012 and 2016 maps and one drawn by judges.
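To give the flavor of “successive small changes,” here is a deliberately tiny toy in Python. It is not the Duke team's algorithm: I assume a made-up state whose 40 precincts sit in a row, so a district is just a run of consecutive precincts and connectedness is automatic. The chain moves one district boundary by one precinct at a time and rejects any move that unbalances the populations. All names (`sample_plans`, `balanced`, `dem_seats`), the random vote counts, and the parameter values are assumptions made for illustration.

```python
import random


def dem_seats(cuts, dem, rep):
    """Count districts in which Democrats outpoll Republicans."""
    bounds = [0] + list(cuts) + [len(dem)]
    return sum(
        sum(dem[lo:hi]) > sum(rep[lo:hi])
        for lo, hi in zip(bounds, bounds[1:])
    )


def balanced(cuts, pop, tol):
    """True if every district is nonempty and within tol of the ideal size."""
    bounds = [0] + list(cuts) + [len(pop)]
    ideal = sum(pop) / (len(cuts) + 1)
    return all(
        hi > lo and abs(sum(pop[lo:hi]) - ideal) <= tol * ideal
        for lo, hi in zip(bounds, bounds[1:])
    )


def sample_plans(dem, rep, cuts, steps, thin, tol, rng):
    """Random walk on valid plans; record Democratic seats every `thin` steps."""
    pop = [d + r for d, r in zip(dem, rep)]
    cuts = list(cuts)
    assert balanced(cuts, pop, tol), "starting plan must be valid"
    results = []
    for step in range(1, steps + 1):
        # Propose moving one randomly chosen boundary by one precinct.
        proposal = list(cuts)
        i = rng.randrange(len(cuts))
        proposal[i] += rng.choice((-1, 1))
        # Keep the move only if the plan stays valid; otherwise stay put.
        if balanced(proposal, pop, tol):
            cuts = proposal
        # Thinning: many small changes separate one recorded map from the next.
        if step % thin == 0:
            results.append(dem_seats(cuts, dem, rep))
    return results


rng = random.Random(0)
dem = [rng.randint(30, 70) for _ in range(40)]  # made-up precinct votes
rep = [100 - d for d in dem]                    # 100 voters per precinct
start = [10, 20, 30]                            # four districts of 10 precincts

observed = dem_seats(start, dem, rep)
samples = sample_plans(dem, rep, start, steps=20000, thin=50, tol=0.2, rng=rng)
fraction = sum(s <= observed for s in samples) / len(samples)

print(f"starting plan: {observed} Democratic seats")
print(f"share of sampled plans with that many or fewer: {fraction:.3f}")
```

The last two lines are the analogue of the tomato-can question: how unusual is the plan in hand compared with plans drawn without looking at party? Real maps live on a two-dimensional graph of precincts, where preserving connectedness and respecting county lines takes far more care than this one-dimensional sketch suggests.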

Mattingly has also done analyses of congressional districts in Wisconsin and Pennsylvania, and has helped lawyers prepare briefs for cases challenging district maps. His research has been cited in many decisions, including that of the three-judge panel that ruled in August 2018 that the NC congressional districts were unconstitutional. For more details see the Quantifying Gerrymandering blog:

https://sites.duke.edu/quantifyinggerrymandering/author/jonmduke-edu/

Articles about Mattingly’s work have appeared in

(June 26, 2018) Proceedings of the National Academy of Sciences 115 (2018), 6515–6517

(January 17, 2018) Nature 553 (2018), 250

(October 6, 2017) New York Times https://www.nytimes.com/2017/10/06/opinion/sunday/computers-gerrymandering-wisconsin.html

The last article is a good (or perhaps I should say bad) example of what can happen when your work is written about in the popular press. The article, written by Jordan Ellenberg, is, to stay within the confines of polite conversation, simply awful. Here I will confine my attention to its two major sins.

- Ellenberg refers several times to the Duke team but never mentions them by name. I guess our not-so-humble narrator does not want to share the spotlight with the people who did the hard work. The three people who wrote the paper are Jonathan Mattingly, professor and chair of the department, Greg Herschlag, a postdoc, and Robert Ravier, one of our better grad students. The paper went from nothing to fully written in two weeks in order to get ready for the court case. However, thanks to a number of late nights they were able to present clear evidence of gerrymandering. It seems to me that they deserve to be mentioned in the article, and it should have mentioned that the paper was available on the arXiv, so people could see for themselves.

- The last sentence of the article says “There will be many cases, maybe most of them, where it’s impossible, no matter how much math you do, to tell the difference between innocuous decision making and a scheme – like Wisconsin’s – designed to protect one party from voters who might prefer the other.” OMG. With many anti-gerrymandering lawsuits being pursued across the country, why would a “prominent” mathematician write that in most cases math cannot be used to detect gerrymandering?