Overview

The “Massive Internal System Traffic Research Analysis and Logging” (MISTRAL) project leverages and expands an internal network monitoring fabric and its data collection points to create a privacy-preserving reference scientific security dataset (RSSD, the MISTRAL Dataset), along with an associated data pipeline and analysis techniques. Together, we expect these approaches to aid the detection of abnormal or malicious activities impacting the identified science drivers and cyberinfrastructure in Duke data centers and research labs. They should also provide a rich data source for cybersecurity researchers, who can extend those impacts more broadly via protected access to the full RSSD or via a public-access version.

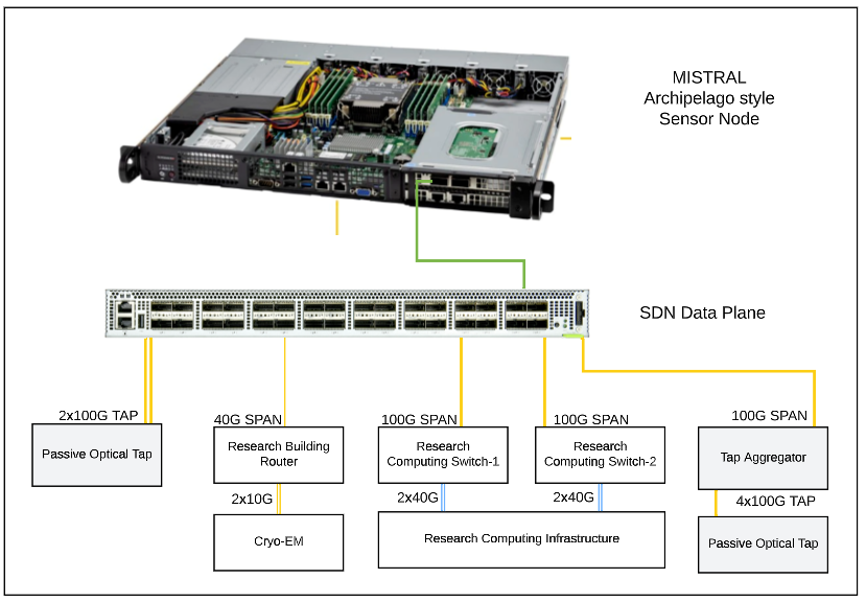

Duke developed and deployed a proof-of-concept prototype topology as illustrated in Fig. 1, below. The prototype architecture collects traffic data using passive optical taps and port-mirroring via SPAN (Switch Port ANalyzer) sessions on selected network infrastructure components.

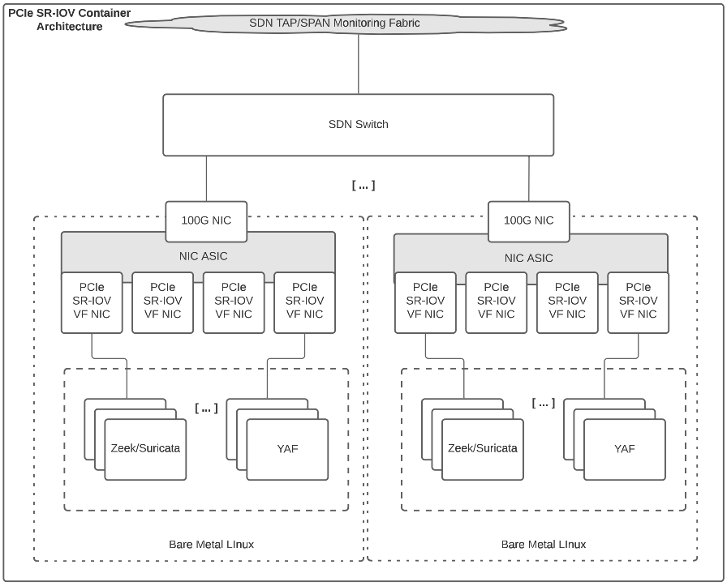

The SDN data plane and network appliance used as the MISTRAL sensor node build upon prior NSF-funded work as a part of Archipelago (OAC-1925550). As a part of this deployment, data ingested by the SDN data plane is symmetrically hashed so that both sides of a given conversation appear in the same output stream. A unique VLAN tag is then added to these output streams for delivery to sensor appliances that can be customized with personas for different use cases as described further here: https://sites.duke.edu/archipelago/files/2023/01/archipelago-final-appliance-evolution-v1.pdf. In this deployment scenario, a 100G Mellanox ConnectX-5 NIC is used with PCIe SR-IOV virtual functions enabled to place traffic associated with each unique VLAN tag into a dedicated virtual function within the NIC as illustrated in Fig. 2.
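To make the symmetric hashing idea concrete, the following is a minimal sketch, not the hashing used by the actual SDN data plane. It assumes a flow is identified by its two endpoints plus the IP protocol number, and it orders the endpoints before hashing so that both directions of a conversation produce the same key. The function name `symmetric_flow_hash`, the use of SHA-256, and the `n_streams` parameter are all illustrative assumptions.

```python
import hashlib
import ipaddress


def symmetric_flow_hash(src_ip, src_port, dst_ip, dst_port, proto, n_streams):
    """Map a flow to an output stream index in [0, n_streams).

    Hypothetical helper: both directions of a conversation return the
    same index because the two endpoints are sorted before hashing.
    """
    a = (ipaddress.ip_address(src_ip).packed, src_port)
    b = (ipaddress.ip_address(dst_ip).packed, dst_port)
    # Canonical ordering removes the notion of "source" and "destination".
    lo, hi = sorted((a, b))
    key = (
        lo[0] + lo[1].to_bytes(2, "big")
        + hi[0] + hi[1].to_bytes(2, "big")
        + bytes([proto])
    )
    digest = hashlib.sha256(key).digest()
    return int.from_bytes(digest[:4], "big") % n_streams


# A DNS query and its reply land in the same output stream.
forward = symmetric_flow_hash("10.0.0.1", 40000, "162.159.0.33", 53, 17, 8)
reverse = symmetric_flow_hash("162.159.0.33", 53, "10.0.0.1", 40000, 17, 8)
print(forward == reverse)
```

In a deployment like the one described, the resulting stream index would then determine which VLAN tag is applied; that mapping is not shown here because the source does not specify it.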

In the remainder of this post, we highlight some early return-on-investment use cases where the presence of the proof-of-concept prototype topology helped to lower time to resolution of several IT incidents.

Early ROI Use Cases

This section presents an overview of use cases that benefited from the deployment of the SDN-driven monitoring fabric and MISTRAL, even in prototype form. As the examples show, having the MISTRAL fabric in place has been advantageous not only because network flow and packet data are readily available, but also as a “just-in-time” service for collecting data during an ongoing incident, enabling IT engineers to quickly identify and remediate the issue.

Cloudflare

On December 14, 2022, reports started arriving that certain sites on the Internet could not be reached by faculty, staff, and students at Duke. A screenshot from the user perspective is shown in Fig. 3, below. After reviewing the growing list of affected sites, we realized that a common issue was likely impacting them and that it needed further investigation.

Using the data sources presented to the MISTRAL prototype sensor node, we conducted packet captures and noted that DNS lookups for these sites were failing because the Cloudflare DNS servers were not responding, as shown below:

dig cloudflare.net @ns3.cloudflare.com <timeout>
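The same check can be scripted so that it can be repeated during an incident. The sketch below is an illustration using only the Python standard library, not the tooling used at Duke. It hand-builds a minimal DNS query for an A record and reports either the round-trip time or a timeout. The helper names `build_dns_query` and `probe_dns`, the fixed query ID, and the 2-second timeout are assumptions, and the response is not validated beyond being received.

```python
import socket
import struct
import time


def build_dns_query(name, query_id=0x1234, qtype=1):
    """Build a minimal DNS query packet (qtype 1 = A record, class IN)."""
    # Header: ID, flags (recursion desired), 1 question, no other records.
    header = struct.pack("!HHHHHH", query_id, 0x0100, 1, 0, 0, 0)
    # QNAME: each label prefixed by its length, terminated by a zero byte.
    qname = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in name.rstrip(".").split(".")
    ) + b"\x00"
    return header + qname + struct.pack("!HH", qtype, 1)


def probe_dns(server, name, timeout=2.0):
    """Return round-trip seconds for one UDP query, or None on timeout/error."""
    query = build_dns_query(name)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        start = time.monotonic()
        try:
            sock.sendto(query, (server, 53))
            sock.recvfrom(512)  # any reply counts; contents are not checked
        except OSError:  # includes socket timeouts
            return None
        return time.monotonic() - start
```

For example, `probe_dns("162.159.0.33", "cloudflare.net")` targets the address that the traceroute in this post reports for ns3.cloudflare.com; a return value of `None` corresponds to the timeout seen with `dig`.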

We also conducted a traceroute and noted that Cloudflare traffic was flowing towards midco.net via Internet2:

traceroute to ns3.cloudflare.com (162.159.0.33), 30 hops max, 60 byte packets

1 10.138.176.29 (10.138.176.29) 0.666 ms 10.138.176.30 (10.138.176.30) 0.715 ms 0.944 ms
2 tel1-p-po52.netcom.duke.edu (10.237.254.4) 0.544 ms wel1-p-po51.netcom.duke.edu (10.237.254.2) 0.389 ms wel1-p-po53.netcom.duke.edu (10.237.254.6) 0.493 ms
3 10.238.4.79 (10.238.4.79) 0.464 ms 10.238.4.75 (10.238.4.75) 0.540 ms 10.238.4.79 (10.238.4.79) 0.639 ms
4 10.235.255.1 (10.235.255.1) 1.921 ms 2.216 ms 1.871 ms
5 tel-edge-gw1-t0-0-0-1.netcom.duke.edu (10.236.254.102) 2.177 ms 1.816 ms 2.281 ms
6 ws-gw-to-duke.ncren.net (128.109.1.241) 6.380 ms 7.138 ms 5.760 ms
7 hntvl-gw-to-ws-gw.ncren.net (128.109.9.21) 7.464 ms 7.425 ms 7.027 ms
8 bundle-ether250.585.core1.char.net.internet2.edu (198.71.47.217) 12.672 ms 13.161 ms 12.376 ms
9 fourhundredge-0-0-0-0.4079.core1.rale.net.internet2.edu (163.253.2.83) 31.104 ms 31.307 ms 31.433 ms
10 fourhundredge-0-0-0-1.4079.core1.ashb.net.internet2.edu (163.253.2.84) 30.873 ms 30.483 ms 30.533 ms
11 fourhundredge-0-0-0-18.4079.core2.ashb.net.internet2.edu (163.253.1.107) 31.724 ms fourhundredge-0-0-0-17.4079.core2.ashb.net.internet2.edu (163.253.1.9) 30.434 ms fourhundredge-0-0-0-18.4079.core2.ashb.net.internet2.edu (163.253.1.107) 30.624 ms
12 fourhundredge-0-0-0-22.4079.core2.clev.net.internet2.edu (163.253.2.145) 30.155 ms 29.412 ms fourhundredge-0-0-0-1.4079.core2.clev.net.internet2.edu (163.253.1.139) 29.072 ms
13 fourhundredge-0-0-0-2.4079.core2.eqch.net.internet2.edu (163.253.2.17) 32.129 ms 30.859 ms 32.513 ms
14 fourhundredge-0-0-0-51.4079.agg2.eqch.net.internet2.edu (163.253.1.227) 29.562 ms fourhundredge-0-0-0-50.4079.agg2.eqch.net.internet2.edu (163.253.1.225) 31.372 ms fourhundredge-0-0-0-51.4079.agg2.eqch.net.internet2.edu (163.253.1.227) 30.556 ms
15 eqix-ch2.midcontinent.com (208.115.136.166) 29.960 ms 29.639 ms 29.720 ms
16 96-2-128-150-static.midco.net (96.2.128.150) 49.450 ms 48.697 ms 48.916 ms
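When a trace is this long, it can help to collapse it into the sequence of networks it crosses. The following is a rough sketch of that idea, assuming one hop per line in the usual `traceroute` text format and treating the last two labels of each reverse-DNS name as the network's domain. The function names `hop_domains` and `network_path` are hypothetical, and the sample is an abbreviated subset of the hops above with only the first responder per hop.

```python
import re

# Hop number, first responder name, and its IPv4 address in parentheses.
HOP_RE = re.compile(r"^\s*(\d+)\s+(\S+)\s+\(([\d.]+)\)")


def hop_domains(traceroute_text):
    """Return (hop_number, domain) pairs; domain is None without reverse DNS."""
    hops = []
    for line in traceroute_text.splitlines():
        match = HOP_RE.match(line)
        if not match:
            continue  # header line or blank line
        hop, host, ip = match.groups()
        domain = None if host == ip else ".".join(host.split(".")[-2:])
        hops.append((int(hop), domain))
    return hops


def network_path(hops):
    """Collapse consecutive hops in the same domain into one entry."""
    path = []
    for _, domain in hops:
        if domain and (not path or path[-1] != domain):
            path.append(domain)
    return path


SAMPLE = """
traceroute to ns3.cloudflare.com (162.159.0.33), 30 hops max, 60 byte packets
 4  10.235.255.1 (10.235.255.1)  1.921 ms
 6  ws-gw-to-duke.ncren.net (128.109.1.241)  6.380 ms
 8  bundle-ether250.585.core1.char.net.internet2.edu (198.71.47.217)  12.672 ms
15  eqix-ch2.midcontinent.com (208.115.136.166)  29.960 ms
16  96-2-128-150-static.midco.net (96.2.128.150)  49.450 ms
"""

print(" -> ".join(network_path(hop_domains(SAMPLE))))
```

Read this way, the trace leaves the regional network, crosses Internet2, and ends in midco.net rather than at Cloudflare, which is the hop we asked to have investigated.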

We created a SmokePing probe (Figure 4) to perform a DNS lookup for cloudflare.com to monitor the situation, and we reached out to our regional network provider. In parallel, we noted that other universities within North Carolina were experiencing the same issue. We asked our ISP to escalate a ticket with Internet2 to further explore the midco.net hop shown in the traceroute above.

While Internet2 was analyzing the problem, our ISP altered its routing tables to send traffic destined for Cloudflare out through an ISP other than Internet2. Our SmokePing probe then showed restored connectivity, which we also confirmed via our MISTRAL packet capture.

The visibility provided by the monitoring fabric and MISTRAL sensor node was key to our ability to quickly diagnose the issue and escalate it with our upstream ISP.

Session Border Controller (SBC) SIP/Proxy Issue

The Duke OIT telephony team needed to troubleshoot a connectivity issue between a campus external Session Border Controller (SBC), which uses the Session Initiation Protocol (SIP), and a cloud-based service. The monitoring fabric deployed as a part of the MISTRAL proof-of-concept already included connectivity to data feeds from the campus firewall cluster. This existing connectivity allowed senior telephony engineers to conduct network packet captures from within a MISTRAL node to diagnose the SIP connectivity challenges between Duke and an external partner.

SYN Flood Analysis

A portion of infrastructure within Duke that relies on a proxy server came under stress due to a high volume of TCP SYN connection attempts. The prototype monitoring fabric outlined in Figure 1 helped technical teams conduct packet captures to identify the source of the TCP SYN traffic and investigate the problem further. Having the probes in place helped reduce the time needed to analyze the issue.
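As an illustration of the kind of analysis a packet capture enables here, the sketch below counts connection-opening SYN packets per source address. It is an assumption-laden example rather than the procedure the teams followed: it expects raw IPv4 packets starting at the IP header (for instance, frames with the link-layer header already stripped), ignores IPv6 and fragmentation, and uses the hypothetical helper names `syn_source` and `top_syn_sources`.

```python
import socket
from collections import Counter

TCP_SYN = 0x02
TCP_ACK = 0x10


def syn_source(ip_packet):
    """Return the source IP of an initial TCP SYN (SYN set, ACK clear), else None."""
    if len(ip_packet) < 20 or ip_packet[0] >> 4 != 4:
        return None  # too short or not IPv4
    ihl = (ip_packet[0] & 0x0F) * 4  # IPv4 header length in bytes
    if ip_packet[9] != 6 or len(ip_packet) < ihl + 14:
        return None  # not TCP, or TCP header truncated before the flags byte
    flags = ip_packet[ihl + 13]
    if flags & TCP_SYN and not flags & TCP_ACK:
        return socket.inet_ntoa(ip_packet[12:16])
    return None


def top_syn_sources(packets, n=10):
    """Return the n sources sending the most initial SYNs, most frequent first."""
    counts = Counter(src for src in map(syn_source, packets) if src)
    return counts.most_common(n)
```

Filtering on SYN without ACK separates new connection attempts from SYN-ACK replies, so a short list dominated by a few addresses points at specific senders, while a long flat list suggests a distributed or spoofed source.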

Syslog Issues

A portion of infrastructure within the Duke IT environment was generating excessive syslog messages due to what was ultimately identified as a hardware failure. The prototype MISTRAL monitoring fabric outlined in Figure 1 allowed the technical teams troubleshooting the issue to conduct network packet captures, investigate, and ultimately identify the cause.

IPv6 Analysis

Network engineers identified connectivity concerns associated with IPv6 within a portion of the network infrastructure. The prototype monitoring fabric outlined in Figure 1 allowed technical teams to conduct network packet captures, lowering the time needed to investigate and remediate the issue.