By: Chad E Cook PT, PhD, FAPTA

Background:

Each year, in Duke University’s Division of Physical Therapy, I teach a class on research methodology. One of the topics we discuss is how to measure the research impact of researchers in physical therapy and other professions. The discussion is complementary to those that occur in the Appointment, Promotion and Tenure (AP&T) committee in the Department of Orthopaedics, of which I am a member. By definition, research impact metrics are quantitative tools used to assess the influence and productivity of researchers and to give some understanding of who the leaders in their fields are. Without fail, every year the class debates which methods are best. This blog will discuss four of the most common methods and will evaluate their advantages and disadvantages. The order presented does not imply superiority, and these methods are not interchangeable with those used to evaluate the impact of a single journal publication.

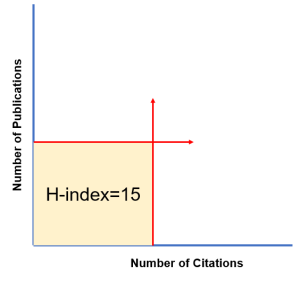

H-Index: The h-index is the largest number h for which a researcher has h publications that have each been cited at least h times. A researcher’s h-index can be found on platforms like Google Scholar, Web of Science, and Scopus, but the values often differ because each platform counts citations from a different set of sources. In most cases, Google Scholar indexes a wider range of material, including preprints and less traditional sources such as textbooks (which is why researchers often have much higher h-indexes on Google Scholar), whereas Web of Science and Scopus focus on more curated, peer-reviewed journals (no textbooks). Although the h-index may vary markedly across professionals, Hirsch suggested that an h-index of 3 to 5 is a reasonable standard for assistant professor, 8 to 12 for associate professor, and 15 to 20 for appointment to full professor [1].
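To make the calculation concrete, here is a minimal Python sketch of the h-index: the largest number h such that h of a researcher's papers have each been cited at least h times. The function name and the citation counts are hypothetical and for illustration only; real platforms apply the same idea to their own citation data.

```python
def h_index(citations):
    """Return the largest h such that h papers have at least h citations each."""
    # Rank papers from most cited to least cited.
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank   # the paper at this rank still has enough citations
        else:
            break      # every later paper has even fewer citations
    return h


# Hypothetical citation counts for one researcher's seven papers.
example_counts = [25, 8, 5, 3, 3, 1, 0]
print(h_index(example_counts))  # prints 3
```

Replacing the 25 with 2,500 in this example leaves the h-index at 3, which illustrates why the metric gives no extra credit for a single very highly cited paper.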

Advantages:

- Balances productivity and impact.

- Simple and widely recognized.

- Useful for comparing researchers in the same field.

Disadvantages:

- Gives no extra credit for very highly cited papers once they are counted among the core publications.

- Biased toward senior researchers with long careers.

- Ignores the contribution of co-authors or positioning of authorship.

- Field-dependent (e.g., higher citation rates in some fields).

M-Index (m-Quotient): Hirsch recognized the limitations of the h-index and its bias toward senior researchers who have had many years to acquire citations. He subsequently proposed an adjustment: dividing the h-index by the time (in years) since the researcher’s first publication. Thus, if a researcher has an h-index of 20 and their first publication appeared 25 years ago, their m-index is 0.8 (20/25). Hirsch suggested that an m-index of 1.0 is very good, 2.0 is outstanding, and 3.0 is exceptional [1].
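The m-index arithmetic can be sketched in a few lines of Python. This is a minimal illustration using the example above; the function and parameter names are hypothetical.

```python
def m_index(h_index_value, years_since_first_publication):
    """Divide the h-index by the number of years since the first publication."""
    if years_since_first_publication <= 0:
        raise ValueError("years_since_first_publication must be positive")
    return h_index_value / years_since_first_publication


# The example from the text: an h-index of 20, first publication 25 years ago.
print(m_index(20, 25))  # prints 0.8
```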

Advantages:

- Normalizes the h-index for career length [2].

- Useful for comparing early-career researchers.

Disadvantages:

- Still inherits many of the limitations of the h-index (e.g., less meaningful for researchers with short careers).

- It’s more difficult to understand than an h-index.

Field-Weighted Citation Impact (FWCI): The FWCI is a metric used to measure the citation impact of a researcher’s work compared to the expected citation rate in their specific field [3]. It is calculated by taking the total number of citations received by a researcher’s publications and dividing it by the average number of citations that similar publications (same field, publication type, and year) are expected to receive. The FWCI is therefore a ratio of the actual citation count to the expected citation count. For example, if a researcher’s publications have received 50 citations, but the expected number for similar publications is 25, the FWCI would be 2.0; this means the researcher has been cited twice as much as expected. An FWCI of 1 indicates that the researcher has been cited exactly as expected, whereas an FWCI greater than 1 indicates higher-than-expected citation impact, and an FWCI less than 1 indicates lower-than-expected citation impact.
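The ratio described above can be sketched in Python as follows. This is a simplified illustration only: the function names are hypothetical, and the expected citation count is taken as a given input, whereas in practice it comes from a citation database's field-level data.

```python
def fwci(actual_citations, expected_citations):
    """Ratio of citations received to citations expected for similar publications."""
    if expected_citations <= 0:
        raise ValueError("expected_citations must be positive")
    return actual_citations / expected_citations


def interpret_fwci(value):
    """Describe an FWCI value relative to the benchmark of 1."""
    if value > 1:
        return "higher than expected"
    if value < 1:
        return "lower than expected"
    return "exactly as expected"


# The example from the text: 50 citations received, 25 expected.
score = fwci(50, 25)
print(score, interpret_fwci(score))  # prints 2.0 higher than expected
```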

Advantages:

- Accounts for field-specific citation practices.

- Normalizes impact across disciplines.

Disadvantages:

- Requires access to field-specific data.

- Calculators are often for articles, not researchers.

- Less intuitive for non-specialists.

NIH Relative Citation Ratio (RCR): The National Institutes of Health (NIH) Relative Citation Ratio (RCR) is a metric developed by the NIH Office of Portfolio Analysis to measure the scientific influence of a research paper [4]. The RCR is calculated in two steps. First, the citation rate of each paper is normalized to its field and publication year; the field citation rate is estimated from the paper’s co-citation network (a field normalization similar in intent to the Field-Weighted Citation Impact, see above). Second, the expected citation rate is calculated from NIH-funded papers in the same field and publication years. The RCR then compares the citation rates of the researcher’s papers to the expected citation rates. A researcher with an RCR of 1.0 has received citations at the same rate as the median NIH-funded researcher in their field. Values above 1.0 indicate that the researcher is cited at a rate above that of the median NIH-funded researcher. An RCR of 1.5 would mean the researcher is cited 1.5 times as frequently as the median NIH-funded researcher, an RCR of 2.3 would mean 2.3 times as frequently, and so on. Values below 1.0 suggest the researcher is cited less frequently than the median NIH-funded researcher.
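The comparison at the heart of the RCR can be illustrated with a deliberately simplified Python sketch. It assumes the expected citation rate for each paper is already known; the actual NIH tool derives that benchmark from co-citation networks and NIH-funded papers, which this sketch does not attempt to reproduce. All names and numbers below are hypothetical.

```python
from statistics import mean


def paper_ratio(citations_per_year, expected_citations_per_year):
    """Ratio of a paper's citation rate to its expected (benchmark) rate."""
    if expected_citations_per_year <= 0:
        raise ValueError("expected_citations_per_year must be positive")
    return citations_per_year / expected_citations_per_year


def mean_ratio(papers):
    """Average the per-paper ratios across a researcher's papers."""
    return mean(paper_ratio(actual, expected) for actual, expected in papers)


# Hypothetical (citations per year, expected citations per year) pairs.
example_papers = [(6.0, 4.0), (2.0, 4.0), (9.0, 3.0)]
print(round(mean_ratio(example_papers), 2))  # prints 1.67
```

In this made-up example the three papers have ratios of 1.5, 0.5, and 3.0, so the researcher's papers are cited, on average, at about 1.67 times the benchmark rate.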

Advantages:

- Normalizes for field and time.

- Useful for comparing researchers within disciplines.

- The website is free to use: https://icite.od.nih.gov/analysis

Disadvantages:

- The website is complicated and takes a little time to learn how to navigate.

- It is less known than other methods such as the h-index.

Summary: Each metric has its strengths and weaknesses, and no single metric can fully capture the impact of every researcher. All four methods rely on citations, although two (FWCI and RCR) compare a researcher’s citations with those of others in similar fields. In our AP&T meetings, we consider a combination of metrics to be the best approach, tailored to the specific context (e.g., field, career stage, or type of impact). We also look at the number of first-author and senior-author papers; there are researcher impact metrics that capture authorship position as well, but they are used less often.

Disclaimer: Both DeepSeek and Microsoft Copilot were used to assist in writing this blog.

References

1. Shah FA, Jawaid SA. The h-index: An Indicator of Research and Publication Output. Pak J Med Sci. 2023 Mar-Apr;39(2):315-316.

2. Kurian C, Kurian E, Orhurhu V, Korn E, Salisu-Orhurhu M, Mueller A, Houle T, Shen S. Evaluating factors impacting National Institutes of Health funding in pain medicine. Reg Anesth Pain Med. 2025 Jan 7:rapm-2024-106132.

3. Aggarwal M, Hutchison B, Katz A, Wong ST, Marshall EG, Slade S. Assessing the impact of Canadian primary care research and researchers: Citation analysis. Can Fam Physician. 2024 May;70(5):329-341.

4. Vought V, Vought R, Herzog A, Mothy D, Shukla J, Crane AB, Khouri AS. Evaluating Research Activity and NIH-Funding Among Academic Ophthalmologists Using Relative Citation Ratio. Semin Ophthalmol. 2025 Jan;40(1):39-43.