Randomized Controlled Trials

In clinical research, treatment efficacy (the extent to which a specific intervention, such as a drug or therapy, produces a beneficial result under ideal conditions) and effectiveness (the degree to which an intervention achieves its intended outcomes in real-world settings) are commonly studied using randomized controlled trials. Randomized controlled trials compare the average treatment effects (ATEs) of two or more interventions on an outcome [1]. By definition, an ATE represents the average difference in outcomes between a treatment group (those who receive the treatment) and a comparison group (those who receive another treatment, or a control group that does not receive the treatment) across the entire study population. Less commonly, researchers include a secondary “responder analysis” that looks at the proportion of individuals in each group who meet a clinically meaningful threshold.
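The two summaries described above can be contrasted in a few lines of Python. This is a minimal sketch on invented improvement scores (hypothetical values, not study data). The ATE is estimated as the difference in group means, and the responder analysis reports the share of each group that reaches a threshold. The function names and the 10-point threshold are illustrative assumptions.

```python
from statistics import mean

# Hypothetical improvement scores (points; higher = better), NOT study data
treatment = [12, 3, 15, 0, 9, 14, 2, 11]
control = [5, 4, 8, 1, 6, 7, 3, 6]

def average_treatment_effect(treated, untreated):
    """Difference in group means: the usual RCT estimate of the ATE."""
    return mean(treated) - mean(untreated)

def responder_proportion(scores, threshold):
    """Share of individuals whose improvement meets a (hypothetical) threshold."""
    return sum(s >= threshold for s in scores) / len(scores)

ate = average_treatment_effect(treatment, control)
print(f"ATE: {ate:.2f} points")
print(f"Responders (>= 10 points): treatment {responder_proportion(treatment, 10):.0%}, "
      f"control {responder_proportion(control, 10):.0%}")
```

Here the treatment arm improves by 3.25 points more on average, yet only half of its members reach the threshold. The single ATE does not reveal that split.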

A Potential Problem with Average Treatment Effects

Average treatment effect calculations are essential for policymakers, researchers, and practitioners to understand the effectiveness of interventions, policies, or treatments and to inform decision-making across many fields. Put simply, treatments that produce better ATEs are considered the most effective interventions and find their way into clinical practice guidelines and recommendations.

However, conceptually, this is a problem for two key reasons. First, from the perspective of a practicing clinician, most of our ‘evidence’ comes from studies that look at averages across groups of patients, whereas in clinical practice our decisions are applied to individual patients [1]. Group results from research studies don’t always match the needs of the patient in front of us. Second, patient populations within a research study are heterogeneous in ways we don’t routinely measure. That is, they differ in characteristics such as disease etiology and endotype, comorbidities, or concomitant exposures, and these characteristics can modify the effect of a treatment on outcomes [2]. Herein lies the problem with ATEs: they say little about the distribution of treatment effects, including the fact that some patients do well with an efficacious treatment whereas others do not [3].
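The point about distributions can be illustrated with two invented sets of individual treatment effects that share the same mean. This is a thought experiment only. In a parallel-group trial, individual effects are not directly observed, so the values below are hypothetical.

```python
from statistics import mean, pstdev

# Hypothetical individual treatment effects (points of improvement), NOT study data
uniform_effects = [10, 10, 10, 10, 10, 10]   # everyone benefits a little
mixed_effects = [30, 25, 20, 0, -5, -10]     # some benefit a lot, some are harmed

for label, effects in [("uniform", uniform_effects), ("mixed", mixed_effects)]:
    harmed = sum(e < 0 for e in effects)      # count of patients made worse
    print(f"{label}: mean={mean(effects):.1f}, SD={pstdev(effects):.1f}, harmed={harmed}")
```

Both sets have a mean effect of 10 points. In the second set, however, two of six patients are worse off, and the average alone would never show it.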

The Individual Patient Conundrum

To help understand heterogeneous effects, it is common, when analyzing experiments, to look for differences in outcomes among subgroups defined by age, sex, or another commonly captured data point. To date, subgrouping has provided only marginal benefit in understanding treatment effects. In reality, heterogeneous effects are likely more granular and may be influenced by factors we don’t routinely capture, such as biomarkers or disease mechanisms. Because of this, we should expect variability in treatment effectiveness even when treatment parameters are held constant, and even among individuals with similar diagnoses and/or similar staging [4].
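A one-variable-at-a-time subgroup analysis splits the sample on a single recorded characteristic and re-estimates the treatment difference within each level. The sketch below does this on invented records. The arm labels "A" and "B", the outcomes, and the helper name `subgroup_differences` are all hypothetical.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical records, NOT study data: (subgroup value, arm, improvement in points)
records = [
    ("female", "A", 14), ("female", "A", 10), ("female", "B", 8), ("female", "B", 6),
    ("male", "A", 7), ("male", "A", 5), ("male", "B", 6), ("male", "B", 8),
]

def subgroup_differences(rows):
    """Difference in mean outcome (arm A minus arm B) within each subgroup level."""
    groups = defaultdict(lambda: defaultdict(list))
    for subgroup, arm, outcome in rows:
        groups[subgroup][arm].append(outcome)
    return {sg: mean(arms["A"]) - mean(arms["B"]) for sg, arms in groups.items()}

result = subgroup_differences(records)
print(result)
```

With only two people per cell, a 5-point versus -1-point contrast like this one can easily arise by chance. This is one reason subgroup findings are treated cautiously.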

A Hypothetical Exercise

Let’s do a hypothetical exercise using real-life data that have been purposively selected as a learning opportunity. A 2022 systematic review found that the average sample size of a physical therapy-oriented clinical trial was 67 [5]. For this exercise, we generated a sample of 67 individuals with chronic low back pain, drawn from the clinical data of over 80,000 patients who received physical therapy treatment and completed a full cycle of care. Based on an analysis of billing codes, we targeted patients who received either predominantly exercise or predominantly cognitive behavioral therapy (CBT) treatment. We assigned 33 patients to a dominant exercise group and 34 patients to a dominant CBT group. We purposively ensured that their baseline characteristics, including their initial Oswestry Disability Index (ODI) score, were similar (Table 1).

Table 1. Baseline Characteristics of the Generated Sample of 67 Subjects with Chronic Low Back Pain.

| Characteristic | Cognitive Behavioral Therapy Group (N=34) | Exercise Group (N=33) | P value |
|---|---|---|---|
| Age, mean (SD) | 44.2 (15.4) | 43.1 (15.6) | .77 |
| Sex, male, n (%) | 17 (50%) | 15 (45%) | .71 |
| Oswestry Disability Index (percentage), mean (SD) | 41.39 (18.59) | 41.47 (16.48) | .99 |
| Education, college, n (%) | 20 (59%) | 22 (61%) | .50 |
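The continuous rows of Table 1 can be checked from the reported means and standard deviations alone. The sketch below assumes the authors used a pooled-variance (Student's) two-sample t-test. The source does not name the test, so this is an assumption. Under that assumption, the reported p values for age (.77) and baseline ODI (.99) are reproduced. SciPy is required.

```python
from scipy.stats import ttest_ind_from_stats

# Summary statistics copied from Table 1: (mean, SD, n) for CBT, then exercise
rows = {
    "Age": ((44.2, 15.4, 34), (43.1, 15.6, 33)),
    "Baseline ODI": ((41.39, 18.59, 34), (41.47, 16.48, 33)),
}

p_values = {}
for name, ((m1, s1, n1), (m2, s2, n2)) in rows.items():
    # Assumed test: pooled-variance two-sample t-test computed from summary statistics
    result = ttest_ind_from_stats(m1, s1, n1, m2, s2, n2, equal_var=True)
    p_values[name] = result.pvalue
    print(f"{name}: t = {result.statistic:.2f}, p = {result.pvalue:.2f}")
```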

In our generated sample, the three-month outcomes were also similar across the two treatment groups (Table 2). A linear model controlling for baseline ODI yielded p = .94, indicating no statistically significant difference between the two interventions.
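To show what “controlling for baseline ODI” means mechanically, the sketch below fits an ANCOVA-style linear model, outcome ~ group + baseline, by ordinary least squares and tests the group coefficient. The data are simulated with no true group effect and are not the study sample. The source does not report its exact model specification, so treat this as one plausible form. NumPy and SciPy are required.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(seed=1)

# Simulated data (NOT the study data); the true group effect is set to zero
n = 67
group = np.r_[np.zeros(34), np.ones(33)]          # 0 = CBT, 1 = exercise
baseline = rng.normal(41, 17, size=n)             # baseline ODI
followup = 5 + 0.6 * baseline + 0.0 * group + rng.normal(0, 12, size=n)

# Design matrix: intercept, group indicator, baseline ODI
X = np.column_stack([np.ones(n), group, baseline])
coef, *_ = np.linalg.lstsq(X, followup, rcond=None)

# Classical OLS standard errors from the residual variance
residuals = followup - X @ coef
df_resid = n - X.shape[1]
sigma2 = residuals @ residuals / df_resid
cov = sigma2 * np.linalg.inv(X.T @ X)

se_group = np.sqrt(cov[1, 1])
t_group = coef[1] / se_group
p_group = 2 * stats.t.sf(abs(t_group), df_resid)  # two-sided p for the group term
print(f"Adjusted group difference: {coef[1]:.2f} (SE {se_group:.2f}), p = {p_group:.2f}")
```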

Table 2. Three-Month or Discharge Oswestry Disability Index Outcomes.

| Outcome | Cognitive Behavioral Therapy Group (N=34) | Exercise Group (N=33) | P value | Effect Size (partial eta squared) |
|---|---|---|---|---|
| Oswestry Disability Index (percentage), mean (SD) | 29.57 (19.6) | 29.29 (12.8) | .94 | .00 |
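Partial eta squared for the group term is the share of variance attributable to group after setting aside variance explained by the other model terms: SS_effect / (SS_effect + SS_error). When only an F statistic and its degrees of freedom are available, the same quantity can be recovered as F·df_effect / (F·df_effect + df_error). In the sketch below, the F value is illustrative and not taken from the source. The 64 error degrees of freedom assume a model with an intercept, group, and baseline ODI fitted to 67 people.

```python
def partial_eta_squared(f_stat: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_stat * df_effect) / (f_stat * df_effect + df_error)

# Illustrative (hypothetical) F for a 1-df group effect with 64 error df
print(f"{partial_eta_squared(0.006, 1, 64):.2f}")   # a near-zero F gives a near-zero effect size
```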

Strictly speaking, a correct way to report these findings would be: “on average, after controlling for baseline values, there is no significant difference between the mean outcomes of the exercise group and the mean outcomes of the CBT group.” An incorrect description, which I’ve heard a hundred times, is “exercise has no effect” or “CBT has no effect.” Interpreting the ATEs, we would conclude that neither treatment was superior. For the “average” patient with chronic low back pain, we might recommend either, provided each outperformed a legitimate placebo or sham, was cost-effective, and did not involve high levels of harm.
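A confidence interval shows why “no significant difference” is not the same as “no effect.” The sketch below computes an unadjusted Welch 95% CI for the difference in 3-month ODI, using the means and SDs in Table 2. It ignores the baseline adjustment in the article's model, so it approximates that analysis rather than reproducing it. SciPy is required.

```python
import math
from scipy import stats

# Table 2: 3-month ODI, mean (SD) and n per group
m_cbt, sd_cbt, n_cbt = 29.57, 19.6, 34
m_ex, sd_ex, n_ex = 29.29, 12.8, 33

diff = m_cbt - m_ex
var_cbt, var_ex = sd_cbt**2 / n_cbt, sd_ex**2 / n_ex
se = math.sqrt(var_cbt + var_ex)

# Welch-Satterthwaite degrees of freedom (unequal variances)
df = (var_cbt + var_ex) ** 2 / (var_cbt**2 / (n_cbt - 1) + var_ex**2 / (n_ex - 1))
t_crit = stats.t.ppf(0.975, df)
low, high = diff - t_crit * se, diff + t_crit * se
print(f"Difference {diff:.2f} ODI points, 95% CI {low:.1f} to {high:.1f}")
```

The point estimate is near zero, but the interval spans roughly 8 ODI points in either direction. These data are therefore compatible with meaningful differences favoring either group.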

But what about individualized responses?

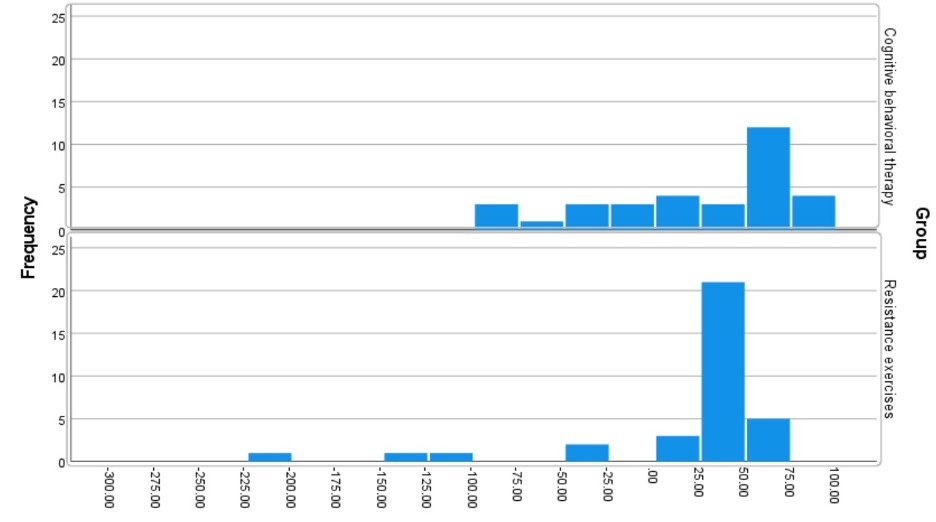

On observation (Table 2), we notice that the standard deviation at the 3-month ODI score is wider in the CBT group (19.6 versus 12.8). Visually it is clear that the percent change in ODI from baseline is different between the two groups (Figure 1). There seems to be a large number of individuals in the exercise group who yield a change at or higher than 30%.

Figure 1. Distribution of Percent Changes in CBT and Exercise Groups.
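Per patient, the percent change plotted in Figure 1 and the responder thresholds discussed below can be computed as improvement relative to baseline. Because lower ODI scores mean less disability, the sketch below assumes improvement is defined as (baseline - follow-up) / baseline × 100. The source does not state this definition explicitly. A change exactly at the threshold counts as meeting it. The function names are hypothetical.

```python
def percent_improvement(baseline: float, followup: float) -> float:
    """Percent reduction in ODI from baseline (positive = less disability)."""
    if baseline <= 0:
        raise ValueError("baseline ODI must be positive to compute a percent change")
    return (baseline - followup) * 100 / baseline

def meets_threshold(baseline: float, followup: float, threshold: float = 30.0) -> bool:
    """True if the reduction from baseline is at least `threshold` percent."""
    return percent_improvement(baseline, followup) >= threshold

# Hypothetical patient: ODI 40% at baseline, 26% at discharge
print(percent_improvement(40, 26))                                # 35.0
print(meets_threshold(40, 26), meets_threshold(40, 26, threshold=50))   # True False
```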

We can’t determine whether the ODI outcome, or “treatment response,” is specifically related to the treatment provided. If you are interested in this challenge, please review this paper [7], which discusses in detail why we never really know whether the treatment is the reason for the differences. We can, however, say that patients in the CBT group had widely different (heterogeneous) outcomes despite receiving similar treatment, whereas exercise appears to produce a more consistent response, with the exception of a few outliers. How different? Let’s explore the variability.

Let’s consider the widely used value of a 30% change in the ODI from baseline as a “least clinically meaningful difference” [8]. In addition, let’s consider the less commonly used value of a 50% change from baseline as a “substantially meaningful difference.” Interestingly, a statistically significantly higher proportion of individuals in the exercise group met the 30% threshold (76%) than in the CBT group (52%; p = .03). If we use a 30% change from baseline as our interpretive measure of success, we end up with results that are markedly different from the ATE, and we would advocate exercise as the treatment of choice for chronic low back pain.

Table 3. Proportions Meeting the Least Clinically Meaningful Difference (≥30% ODI Improvement) with CBT versus Exercise.

| ODI improvement from baseline | Cognitive Behavioral Therapy Group (N=34) | Exercise Group (N=33) | P value |
|---|---|---|---|
| ≥30% | 17 (52%) | 26 (76%) | .03 |
| <30% | 16 (48%) | 8 (24%) | |
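The p values in Tables 3 and 4 are consistent with a Pearson chi-square test without continuity correction on the reported counts. The source does not name its test, so this is an assumption. The counts below are taken exactly as printed; note that they sum to 33 in the CBT column and 34 in the exercise column. This is a standard-library sketch that relies on the fact that, with 1 degree of freedom, the chi-square upper-tail probability equals erfc(sqrt(chi2 / 2)).

```python
import math

def chi_square_2x2(a: int, b: int, c: int, d: int) -> tuple[float, float]:
    """Pearson chi-square (no continuity correction) for the 2x2 table [[a, b], [c, d]].

    Returns (statistic, p value). With 1 degree of freedom the upper-tail
    probability is erfc(sqrt(chi2 / 2)).
    """
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    return chi2, math.erfc(math.sqrt(chi2 / 2))

# Rows: CBT, exercise; columns: met threshold, did not (counts from Tables 3 and 4)
_, p30 = chi_square_2x2(17, 16, 26, 8)
_, p50 = chi_square_2x2(16, 17, 5, 29)
print(f"30% threshold: p = {p30:.3f}")
print(f"50% threshold: p = {p50:.4f}")
```

The same function applied to the sex row of Table 1 (17 vs 17 and 15 vs 18) gives p ≈ .71, which also matches the reported value.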

What about a 50% change?

But it gets even stranger. At a 50% change from baseline, a statistically significantly higher proportion of individuals in the CBT group (48%) met the threshold than in the exercise group (15%; p < .01). These results are the opposite of those found at 30% and, again, very different from the ATE reported earlier, which showed no significant difference. If we used a 50% change from baseline, we would advocate CBT as the treatment of choice for chronic low back pain.

Table 4. Proportions Meeting the Substantially Meaningful Difference (≥50% ODI Improvement) with CBT versus Exercise.

| ODI improvement from baseline | Cognitive Behavioral Therapy Group (N=34) | Exercise Group (N=33) | P value |
|---|---|---|---|
| ≥50% | 16 (48%) | 5 (15%) | <.01 |
| <50% | 17 (52%) | 29 (85%) | |
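The reversal between the 30% and 50% thresholds follows from the shapes of the two distributions, not from anything special about either cut-off. The sketch below uses invented percent-improvement values (not the study data). One arm is clustered around 30-45%, while the other is split between large and small improvements. As the threshold is swept, the responder comparison flips even though the two means are almost identical.

```python
from statistics import mean

# Hypothetical percent improvements in ODI, invented for illustration (NOT study data)
consistent_arm = [32, 35, 38, 40, 42, 44, 36, 34, 39, 41]   # most improve moderately
variable_arm = [75, 65, 60, 55, 52, 10, 5, 0, 20, 38]       # large gains or little change

def responder_rate(changes, threshold):
    """Proportion of patients whose percent improvement is >= threshold."""
    return sum(c >= threshold for c in changes) / len(changes)

print(f"means: {mean(consistent_arm):.1f} vs {mean(variable_arm):.1f}")
for threshold in (20, 30, 40, 50, 60):
    print(f"{threshold}%: consistent {responder_rate(consistent_arm, threshold):.0%}, "
          f"variable {responder_rate(variable_arm, threshold):.0%}")
```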

Different Boundaries Lead to Different Interpretations

In this single generated sample, we report three markedly different interpretations based on three different theoretical boundaries and methods of calculation. Although the individuals in this dataset were not randomized, the limitations in interpretation are similar regardless of the type of study [9]. The ATE applies only to the composite average of the traits and characteristics of the heterogeneous sample under study. Each patient treated is a unique combination of traits and characteristics, including personality, genetics, and life experiences. The ATE therefore says very little about how any individual patient might respond to a given treatment, particularly treatments with less predictable biological effects such as CBT and exercise. This is a known limitation of ATEs: they reflect group averages, not effects on individuals. In our generated dataset, the ATE showed no significant difference between groups.

Our second measure, a 30% change from baseline on the ODI, identified exercise as the significantly better intervention. Traditionally, the proportion of patients who meet a “least clinically meaningful difference” is examined in a secondary analysis, often called a responder analysis. As mentioned earlier, this method has many limitations. Most notably, responder analyses don’t actually measure who responded to the treatment; they mainly identify who improved, and the treatment may not be the reason [7]. Our third measure, a 50% change from baseline on the ODI, identified CBT as the significantly better intervention. It has the same limitations as the 30% threshold.

This exercise emphasizes that individual patients respond differently to treatments, leading to notable heterogeneous treatment effects. It outlines the limitations of both ATEs, which reflect group measures, and responder thresholds, which assume the treatment is the reason for the change, even within a randomized controlled trial. It also challenges a “one size fits all” approach to treatment and supports the need for more precise methods of matching a person’s condition phenotype to a treatment mechanism.

References

1. Kent DM. Overall average treatment effects from clinical trials, one-variable-at-a-time subgroup analyses and predictive approaches to heterogeneous treatment effects: Toward a more patient-centered evidence-based medicine. Clin Trials. 2023 Aug;20(4):328-337.

2. Varadhan R, Seeger JD. Estimation and Reporting of Heterogeneity of Treatment Effects. In: Velentgas P, Dreyer NA, Nourjah P, et al., editors. Developing a Protocol for Observational Comparative Effectiveness Research: A User’s Guide. Rockville (MD): Agency for Healthcare Research and Quality (US); 2013 Jan. Chapter 3. Available from: https://www.ncbi.nlm.nih.gov/books/NBK126188/

3. Edwards RR, Dworkin RH, Turk DC, Angst MS, Dionne R, Freeman R, Hansson P, Haroutounian S, Arendt-Nielsen L, Attal N, Baron R, Brell J, Bujanover S, Burke LB, Carr D, Chappell AS, Cowan P, Etropolski M, Fillingim RB, Gewandter JS, Katz NP, Kopecky EA, Markman JD, Nomikos G, Porter L, Rappaport BA, Rice ASC, Scavone JM, Scholz J, Simon LS, Smith SM, Tobias J, Tockarshewsky T, Veasley C, Versavel M, Wasan AD, Wen W, Yarnitsky D. Patient phenotyping in clinical trials of chronic pain treatments: IMMPACT recommendations. Pain. 2016 Sep;157(9):1851-1871.

4. Alonso-Perez JL, Lopez-Lopez A, La Touche R, Lerma-Lara S, Suarez E, Rojas J, Bishop MD, Villafañe JH, Fernández-Carnero J. Hypoalgesic effects of three different manual therapy techniques on cervical spine and psychological interaction: A randomized clinical trial. J Bodyw Mov Ther. 2017 Oct;21(4):798-803.

5. Verhagen A, Stubbs PW, Mehta P, Kennedy D, Nasser AM, Quel de Oliveira C, Pate JW, Skinner IW, McCambridge AB. Comparison between 2000 and 2018 on the reporting of statistical significance and clinical relevance in physiotherapy clinical trials in six major physiotherapy journals: a meta-research design. BMJ Open. 2022 Jan 3;12(1):e054875.

6. Baguley T. Serious Stats: A Guide to Advanced Statistics for the Behavioral Sciences. London: Palgrave; 2012.

7. Cook CE, Bejarano G, Reneker J, Vigotsky AD, Riddle DL. Responder Analyses: A Methodological Mess. J Orthop Sports Phys Ther. 2023 Sep;0(9):1-3.

8. Kelechi TJ, Dooley MJ, Mueller M, Madisetti M, Prentice MA. Symptoms Associated With Chronic Venous Disease in Response to a Cooling Treatment Compared to Placebo: A Randomized Clinical Trial. J Wound Ostomy Continence Nurs. 2018 Jul/Aug;45(4):301-309.

9. Post RAJ, Petkovic M, van den Heuvel IL, van den Heuvel ER. Flexible Machine Learning Estimation of Conditional Average Treatment Effects: A Blessing and a Curse. Epidemiology. 2023 Oct 25. doi: 10.1097/EDE.0000000000001684.

The ATE attempts to summarize the variable effects in the collected data as a single effect, using a measure of central tendency. Both the central tendency and the variability around it must be discussed. The 95% confidence interval (CI) and prediction interval (PI) of an effect are likely the most critical aspects in interpreting its clinical utility, yet they are the least discussed. The 95% CI and PI have a meaningful impact on determining whether an effect is estimated precisely enough to be clinically helpful, and most observed effects are not. There are several potential reasons for this heterogeneity, related to the prospective validity of the study, the individual patient, how the intervention is provided, and the tool(s) used to measure the effects. If the variability of the compared groups overlaps within a research study, or if the prediction interval from a synthesis of studies crosses zero, the observed ATE is likely too variable to be considered a “trustworthy” effect. In that case, the most honest interpretation is that the data are not reliable enough to draw any conclusions.
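The difference between a confidence interval and a prediction interval in a synthesis of studies can be made concrete with the commonly used random-effects prediction interval. The formula is mu ± t(k-2) × sqrt(tau² + SE²), where k is the number of studies, tau² is the between-study variance, and SE is the standard error of the pooled estimate. All inputs below are hypothetical. The CI uses the usual normal approximation. SciPy is required.

```python
import math
from scipy import stats

def ci_and_pi(pooled: float, se_pooled: float, tau2: float, k: int, level: float = 0.95):
    """Normal-based CI for the pooled mean effect, and a prediction interval for
    the effect in a new study, under a random-effects meta-analysis of k studies."""
    if k < 3:
        raise ValueError("the prediction interval needs at least 3 studies (k - 2 df)")
    z = stats.norm.ppf(0.5 + level / 2)
    ci = (pooled - z * se_pooled, pooled + z * se_pooled)
    t = stats.t.ppf(0.5 + level / 2, df=k - 2)
    half_width = t * math.sqrt(tau2 + se_pooled**2)   # adds between-study variance
    pi = (pooled - half_width, pooled + half_width)
    return ci, pi

# Hypothetical synthesis: pooled mean difference of 5 points from 8 studies
ci, pi = ci_and_pi(pooled=5.0, se_pooled=1.5, tau2=16.0, k=8)
print(f"95% CI: {ci[0]:.1f} to {ci[1]:.1f}")
print(f"95% PI: {pi[0]:.1f} to {pi[1]:.1f}")
```

With these made-up inputs, the CI excludes zero but the PI does not. This is the situation described above, in which the pooled average looks convincing while the effect expected in a new setting remains uncertain.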
