Scenario:

For RNAseq data, if one uses STAR for the alignment, it could present difficulty for GATK toolkit for the following reasons (https://sequencing.qcfail.com/articles/mapq-values-are-really-useful-but-their-implementation-is-a-mess/)

STAR uses a similar scoring scheme to Tophat except that the value for uniquely mapped reads is 255 instead of 50.

The mapping quality MAPQ (column 5) is 255 for uniquely mapping reads, and int(-10*log10(1-1/[number of loci the read maps to])) for multi-mapping reads. This scheme is same as the one used by Tophat…

In the BQSR steps, we will have this:

64162342 reads (100.00% of total) failing MappingQualityUnavailableFilter

Others (http://seqanswers.com/forums/showthread.php?t=21593) reported GTAK related challenge:

“255 actually in GATK’s CountCovariatesWalker is filtered out by MappingQualityUnavailableFilter in the top of walker:”

It will fail at the Mutect2 variant calling step.

From the SAM specification (http://samtools.github.io/hts-specs/SAMv1.pdf):

MAPQ: MAPping Quality. It equals −10 log10 Pr{mapping position is wrong}, rounded to the nearest integer. A value 255 indicates that the mapping quality is not available.

In the GATK legacy post (https://sites.google.com/a/broadinstitute.org/legacy-gatk-forum-discussions/2013-06-06-2013-02-12/2187-Can-I-bypass-the-MappingQualityUnavailableFilter-in-Unified-Genotyper)

Bismark sets MAPQ to 255 for all alignments.

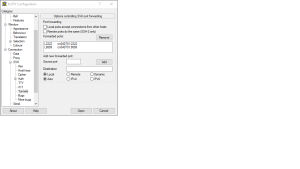

“ -rf ReassignMappingQuality -DMQ 60 ”

People have posted the same errors here at (https://github.com/bcbio/bcbio-nextgen/issues/2163) and propose the following work around

java -jar GenomeAnalysisTK.jar -T SplitNCigarReads -R ref.fasta -I dedupped.bam -o split.bam -rf ReassignOneMappingQuality -RMQF 255 -RMQT 60 -U ALLOW_N_CIGAR_READS

Solution:

Work around with STAR

“So what is needed I think is just adding –outSAMmapqUnique 60 in STAR CLI.”

Work around with GTAK

java -jar GenomeAnalysisTK.jar -T SplitNCigarReads -R ref.fasta -I dedupped.bam -o split.bam -rf ReassignOneMappingQuality -RMQF 255 -RMQT 60 -U ALLOW_N_CIGAR_READS

Alternatively, one can add the option “-T PrintReads -U ALLOW_N_CIGAR_READS” in the BQSR step to allow CIGAR string with “N”.

And, can turn off the filter with the option “–disable-read-filter MappingQualityAvailableReadFilter” in the mutect2 calling method