What Is Word Embedding?

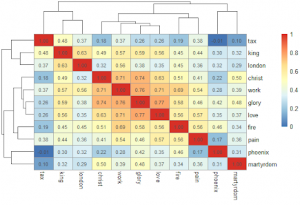

We applied Word2Vec and GloVe to create geometric (vector) representations of words, and we evaluated semantic relationships between words by measuring the cosine similarity of their vectors. For the macro-analysis, we used the GloVe algorithm. GloVe learns from word co-occurrence statistics aggregated over the entire corpus, whereas Word2Vec learns from one local context window at a time. We therefore expected GloVe to produce better semantic and syntactic results for a larger vocabulary.
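The following is a minimal pure-Python sketch of cosine similarity and a nearest-neighbour lookup, which makes the similarity measure concrete. The function names `cosine_similarity` and `most_similar` and the toy vectors are hypothetical illustrations. They are not the project's code or results. In practice, the vectors would come from a trained Word2Vec or GloVe model.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between vectors u and v: (u . v) / (|u| * |v|)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_u * norm_v)


def most_similar(word, embeddings, top_n=3):
    """Rank every other word by its cosine similarity to `word`, highest first."""
    target = embeddings[word]
    scores = [
        (other, cosine_similarity(target, vec))
        for other, vec in embeddings.items()
        if other != word
    ]
    scores.sort(key=lambda pair: pair[1], reverse=True)
    return scores[:top_n]


# Hypothetical toy 3-dimensional vectors, for illustration only
toy_embeddings = {
    "city": [1.0, 0.0, 0.0],
    "town": [0.8, 0.6, 0.0],
    "river": [0.0, 1.0, 0.0],
    "bread": [0.0, 0.0, 1.0],
}

for other, score in most_similar("city", toy_embeddings, top_n=2):
    print(f"{other}: {score:.3f}")
```

With these toy vectors, `town` ranks closest to `city` (similarity 0.8), while the orthogonal `river` and `bread` score 0. Cosine similarity ignores vector length, so it compares the direction of two word vectors rather than their magnitude.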

Limitations

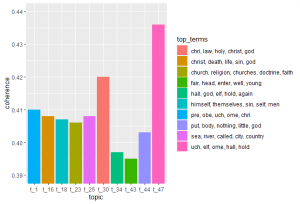

However, we soon discovered that GloVe is very computationally expensive. Instead of 1,000 iterations, which would have taken about 33 hours, we trained the model for only 5 iterations. This traded model performance for the limited project time. Another limitation is that our texts lacked information about genre, which is highly correlated with the model results.
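The cost of each iteration can be read off the standard GloVe objective from the original GloVe paper. We have not confirmed the exact settings of the implementation used here. In the objective, $X_{ij}$ is the corpus-wide co-occurrence count of words $i$ and $j$, $w_i$ and $\tilde{w}_j$ are word and context vectors, and $b_i$ and $\tilde{b}_j$ are biases:

$$
J = \sum_{i,j=1}^{V} f(X_{ij})\left(w_i^{\top}\tilde{w}_j + b_i + \tilde{b}_j - \log X_{ij}\right)^2,
\qquad
f(x) =
\begin{cases}
\left(x / x_{\max}\right)^{\alpha} & \text{if } x < x_{\max},\\
1 & \text{otherwise.}
\end{cases}
$$

Because $f(0) = 0$, each iteration sweeps over every nonzero entry of $X$, so the per-iteration cost grows with the vocabulary size $V$ and the density of co-occurrences. Assuming the cost per iteration is roughly constant, 33 hours for 1,000 iterations works out to about 2 minutes per iteration. Five iterations would then take roughly 10 minutes.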

Word Embedding Result

The phoenix, a metaphor used during the reconstruction of London, becomes increasingly associated with political rather than pragmatic motives. Initially, reconstruction aimed to build a utopian society for the people, prioritizing pragmatic initiatives over symbolic ones. However, as more costly projects were approved, the purpose of reconstruction became more politically oriented.