Before we could begin analyzing our texts, we first needed to make them not only readable to us but also accessible for data analysis. Our cleaning process was guided by the work of the previous 2022 ECBC cohort, and much of our code was inherited from them. All of our texts were downloaded from the Early Print Library Database into separate folders based on their time period. In total, around 52,000 texts were downloaded and later stored on the Duke Compute Cluster.

File Pre-Processing

To start, we cleaned all of our files using the amazing VEP Pipeline software created by students at the University of Wisconsin. This fixed odd characters, gaps, and other formatting issues in the raw files and made the texts easier to access and read. To see the titles, dates, and authors of these texts, we converted the files and folders into a metadata CSV using a script written by the previous cohort. This way, we could filter the texts by date and select only a specific section of the entire corpus. Around 8,400 texts on Early Print fall within our time period.
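As a rough illustration of the date filtering step, here is a minimal sketch. It is not the previous cohort's script: the column names (`filename`, `title`, `author`, `date`), the function `filter_by_year`, and the sample rows are all made up for the example, and the real metadata CSV may be laid out differently.

```python
import csv
import io

# Made-up sample rows standing in for the metadata CSV.
# The column names are assumptions, not the real file's headers.
SAMPLE_CSV = """filename,title,author,date
A00001.txt,Sample Sermon,Author One,1609
A00002.txt,Sample Treatise,Author Two,1575
A00003.txt,Sample Discourse,Author Three,1640
A00004.txt,Sample Voyage,Author Four,1625
"""

def filter_by_year(rows, start_year, end_year):
    """Keep rows whose 'date' value is a year in [start_year, end_year]."""
    selected = []
    for row in rows:
        try:
            year = int(row["date"])
        except (KeyError, TypeError, ValueError):
            continue  # skip rows with a missing or non-numeric date
        if start_year <= year <= end_year:
            selected.append(row)
    return selected

rows = list(csv.DictReader(io.StringIO(SAMPLE_CSV)))
in_period = filter_by_year(rows, 1590, 1639)
print([row["filename"] for row in in_period])
```

With a real file, the `io.StringIO` wrapper would be replaced by an opened CSV file; rows with uncertain dates are simply skipped here, which may or may not match how the actual script handled them.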

Standardization and Lemmatization

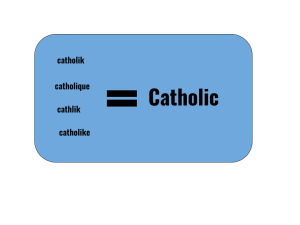

Because our texts date from 1590-1639, the language in them varies not only in the words people used to describe certain groups but also in how those words were spelled. Early modern English is very different from the English we see today, and there was no clear standard spelling for most words: some words were spelled phonetically, and some letters were used interchangeably. For example, it was very common to see ‘servant’ spelled ‘seruant’, as ‘u’ and ‘v’ were interchangeable. Another very frequent example was ‘catholic’, which could be spelled ‘catholik’, ‘cathlik’, ‘catholique’, or ‘catholike’. These variations made it difficult not only to read certain texts but also to search for certain words and track them over time. Therefore, it was necessary to perform both standardization and lemmatization on our entire corpus.

Thankfully, the Early Print Library is already partially standardized and lemmatized, but further steps were needed to make sure that the words we were interested in tracking that may have been overlooked, as well as specific word pairings, had a uniform, standard spelling. For example, ‘West Indies’ was standardized to west_indies in order to track its occurrence accurately. We created standardization and lemmatization dictionaries built from our close readings of texts in our Virginia Sermon corpus. Of course, we could not standardize every single word in the Early Print Library; instead, we prioritized the words we were interested in observing, their relation to Virginia, and the different groups of people we built our sub-corpora around. Once these dictionaries were created, we ran code that replaced each variant listed in the dictionaries with its corresponding standardized word. This made our data analysis significantly easier: words significant to our project that might have ten different spellings across the corpus now all appear in one search and do not skew our data.
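The dictionary replacement can be sketched roughly as follows. This is an illustrative sketch, not our actual code: the names `SPELLING_MAP`, `PHRASE_MAP`, and `standardize` are hypothetical, the entries are only the examples mentioned above, and lowercasing before matching is an assumption made to keep the lookup simple.

```python
import re

# Illustrative entries only, taken from the examples in the text.
SPELLING_MAP = {
    "seruant": "servant",
    "catholik": "catholic",
    "cathlik": "catholic",
    "catholique": "catholic",
    "catholike": "catholic",
}

# Multi-word pairings that are joined into a single trackable token.
PHRASE_MAP = {
    "west indies": "west_indies",
}

def standardize(text):
    """Replace variant spellings and word pairings with their standard form."""
    text = text.lower()  # assumption: matching is done on lowercase text
    # Join word pairings first, allowing any whitespace between the words.
    for phrase, token in PHRASE_MAP.items():
        pattern = r"\b" + r"\s+".join(re.escape(w) for w in phrase.split()) + r"\b"
        text = re.sub(pattern, token, text)

    # Then look up each remaining word; unknown words are left unchanged.
    def swap(match):
        word = match.group(0)
        return SPELLING_MAP.get(word, word)

    return re.sub(r"[a-z_]+", swap, text)

print(standardize("The Catholike seruant sailed to the West Indies"))
```

Replacing whole words rather than raw substrings avoids accidentally rewriting part of a longer, unrelated word.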

Pre-Bag of Words

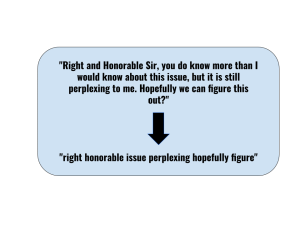

One text cleaning method we had to perform so that our analysis ran as smoothly as possible was Bag of Words, or BOW. Essentially, BOW modeling strips a text of any information that we are not interested in or that would make data analysis difficult, and returns a text file that is just a collection of the unique and important words in the text.

When we look for the frequency of certain words and their association with other terms in certain texts, we are not interested in words like ‘and’, ‘for’, or ‘but’. Words like these, as well as pronouns and prepositions, are what we categorize as stop words, and to prepare for BOW we had to remove every instance of them. Punctuation and numbers also had to be removed, and everything in the text had to be lowercase for uniformity. With everything deemed unnecessary stripped from the text and every word in lowercase, data analysis became much easier because we could focus on just the words we wanted to track.
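The preparation steps described above (lowercasing, dropping punctuation and numbers, removing stop words) might look something like the sketch below. The stop word list here is a tiny illustrative sample rather than the list we actually used, and the names `STOP_WORDS`, `prepare_tokens`, and `bag_of_words` are hypothetical.

```python
import re
from collections import Counter

# A tiny illustrative stop word list; a real list would be much longer.
STOP_WORDS = {"and", "for", "but", "the", "a", "of", "to", "in",
              "he", "she", "it", "they", "we"}

def prepare_tokens(text):
    """Lowercase the text, drop punctuation and numbers, remove stop words."""
    text = text.lower()
    # Keep runs of ASCII letters and underscores only, so punctuation and
    # digits fall away while joined tokens such as west_indies survive.
    # Assumption: any non-ASCII letters have already been normalized.
    tokens = re.findall(r"[a-z_]+", text)
    return [t for t in tokens if t not in STOP_WORDS]

def bag_of_words(text):
    """Count how often each remaining word occurs."""
    return Counter(prepare_tokens(text))

sample = ("The servant, and 3 others, sailed for the West_Indies in 1609; "
          "the servant returned.")
print(prepare_tokens(sample))
print(bag_of_words(sample).most_common(1))
```

Counting the remaining tokens is one simple way to get word frequencies from the cleaned text; it discards word order, which is the defining trade-off of a bag-of-words representation.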