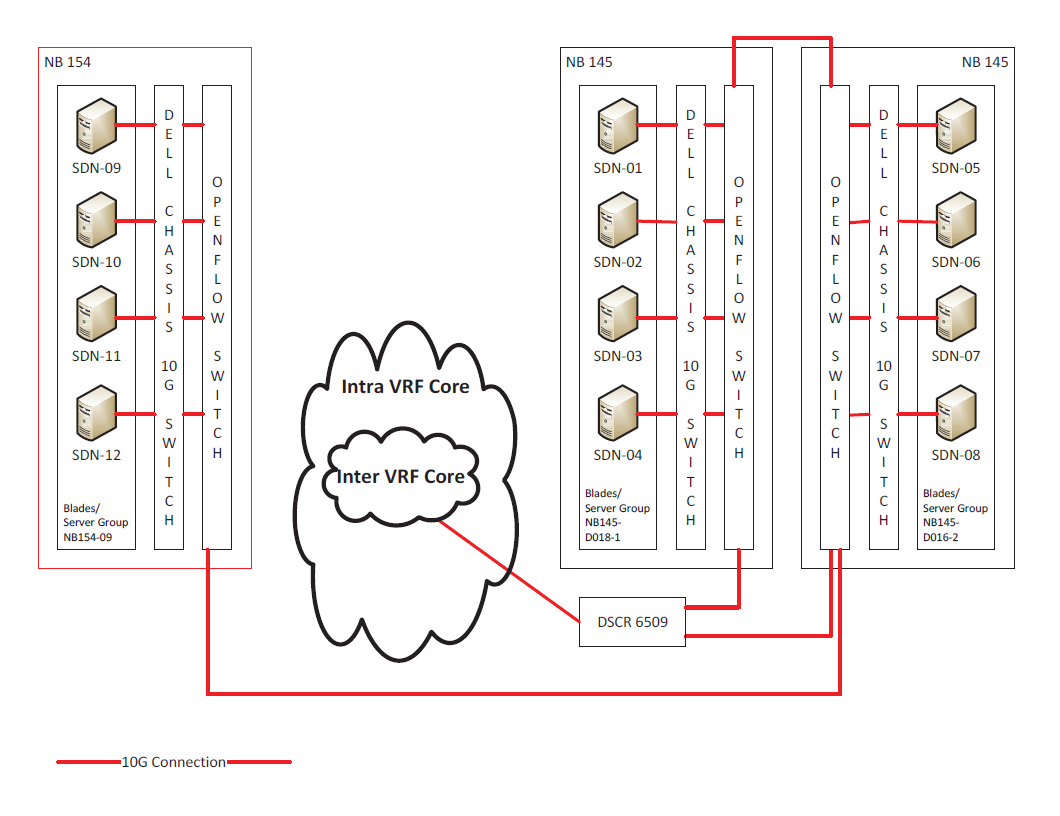

Test Environment Configuration

Three Dell blade chassis, each containing 4 blades, a 4x10Gbps chassis switch, and a 1Gbps interface, are connected via 3 SDN switches.

This configuration may change slightly once the equipment in NB145 has been relocated into a single dedicated rack.

Bandwidth Performance Tests

perfSONAR will be used to test the general performance of the switches

Bandwidth and latency tests will be run every 15 minutes

Two tests will be run with no overlap/competition for switch/server resources

Two tests will be run with overlap/competition for switch/server resources

Tests will be run for 24 hours and documented on sites.duke.edu/dukesdn. An Excel spreadsheet with the bandwidth results will follow as an attachment; the format for the latency results is TBD.
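As a quick sanity check on the volume of data to expect, a 15-minute interval over 24 hours gives 96 runs per test. The sketch below is only an illustration of that arithmetic; the function name `build_schedule` and the start date are placeholders, not part of the perfSONAR configuration.

```python
from datetime import datetime, timedelta


def build_schedule(start, interval_minutes=15, duration_hours=24):
    """Return the start time of every test run inside the test window."""
    step = timedelta(minutes=interval_minutes)
    end = start + timedelta(hours=duration_hours)
    runs = []
    current = start
    while current < end:
        runs.append(current)
        current += step
    return runs


# Placeholder start time; the real start date is not specified in this plan.
schedule = build_schedule(datetime(2013, 1, 1, 0, 0))
print(len(schedule), "runs, first at", schedule[0], "last at", schedule[-1])
```

Each of the four tests (two without contention, two with) would therefore contribute 96 rows to the results spreadsheet.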

File Transfer Load Tests (this may need some discussion)

We have two choices for this – use the test suite that John Pormann assembled, or switch to a GridFTP-based test

Option 1 – JP Test

A series of file transfers using various protocols will be initiated across the switch fabric. The results will indicate peak and average bandwidth available between the servers in the test environment.

Traffic levels on the switches will be monitored and reported via SNMP MIB polls running every 30 seconds (new – needs to be coordinated with the SMOI group at OIT)

Results will be uploaded to sites.duke.edu/dukesdn (sample file: SDN perfSONAR Rates #2)
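For reference, peak and average bandwidth can be derived from per-transfer measurements roughly as follows. This is a minimal sketch, not the JP test suite itself: the helper names and the sample figures are hypothetical, and it assumes each transfer reports bytes moved and elapsed seconds.

```python
def throughput_mbps(bytes_transferred, seconds):
    """Convert one transfer's size and duration into megabits per second."""
    if seconds <= 0:
        raise ValueError("transfer duration must be positive")
    return bytes_transferred * 8 / seconds / 1e6


def summarize_bandwidth(samples_mbps):
    """Return the peak and average of a list of throughput samples."""
    if not samples_mbps:
        raise ValueError("no samples to summarize")
    return {
        "peak_mbps": max(samples_mbps),
        "average_mbps": sum(samples_mbps) / len(samples_mbps),
    }


# Hypothetical transfers: (bytes moved, elapsed seconds).
transfers = [(1_250_000_000, 10), (1_250_000_000, 20), (2_500_000_000, 40)]
samples = [throughput_mbps(size, secs) for size, secs in transfers]
summary = summarize_bandwidth(samples)
print(summary)
```

Note that this averages the per-transfer rates; averaging total bytes over total time is a different (also reasonable) definition, so the write-up should state which one is used.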

Option 2 – GridFTP Test

A series of file transfers using GridFTP will be initiated between source and destination servers across the switch fabric. The results will indicate peak and average bandwidth available between the servers in the test environment. Two sets of tests will be run:

Test 1 – Source Servers are SDN09-SDN12 and Destination Servers are SDN05-SDN08 (1 hop)

Test 2 – Source Servers are SDN09-SDN12 and Destination Servers are SDN01-SDN04 (2 hops)
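The two test sets can be written down as explicit source/destination pairs. The sketch below assumes a one-to-one pairing in server order (SDN09 to SDN05, SDN10 to SDN06, and so on); the plan does not say whether the pairing is one-to-one or all-to-all, so treat that as an open assumption.

```python
def server_names(first, last):
    """Build names such as SDN09..SDN12 from an inclusive numeric range."""
    return [f"SDN{n:02d}" for n in range(first, last + 1)]


def pair_up(sources, destinations):
    """Pair servers one-to-one in order (an assumption, not stated in the plan)."""
    if len(sources) != len(destinations):
        raise ValueError("source and destination lists differ in length")
    return list(zip(sources, destinations))


TESTS = {
    "Test 1 (1 hop)": pair_up(server_names(9, 12), server_names(5, 8)),
    "Test 2 (2 hops)": pair_up(server_names(9, 12), server_names(1, 4)),
}

for name, pairs in TESTS.items():
    print(name, pairs)
```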

Traffic levels on the switches will be monitored and reported via SNMP MIB polls running every 30 seconds (new – needs to be coordinated with the SMOI group at OIT)

Results will be uploaded to sites.duke.edu/dukesdn (sample results file attached above)
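To turn the 30-second SNMP polls into traffic levels, two successive octet-counter readings are differenced and scaled to bits per second. The sketch below assumes the polls read interface octet counters such as `ifHCInOctets`/`ifHCOutOctets` from the IF-MIB (64-bit) or `ifInOctets`/`ifOutOctets` (32-bit); which objects are actually polled still needs to be agreed with the SMOI group. The function name is hypothetical.

```python
def counter_rate_bps(prev_octets, curr_octets, interval_s=30, counter_bits=64):
    """Bits per second between two polls of an SNMP octet counter.

    The modulo handles a single counter wrap between polls, which matters
    for 32-bit counters on fast links.
    """
    if interval_s <= 0:
        raise ValueError("poll interval must be positive")
    modulus = 1 << counter_bits
    delta_octets = (curr_octets - prev_octets) % modulus
    return delta_octets * 8 / interval_s


# Hypothetical readings: 37.5 GB moved in one 30-second poll interval.
print(counter_rate_bps(0, 37_500_000_000) / 1e9, "Gbps")

# Hypothetical 32-bit counter that wrapped between polls.
print(counter_rate_bps(2**32 - 100, 200, counter_bits=32), "bps")
```

A 32-bit octet counter wraps in under 4 seconds at 10Gbps, so with 30-second polls the 64-bit counters are the safer choice on the 10Gbps links.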

Rule Set Tests

A tool such as oFlops will be used to stress the introduction of new rules and/or updates to existing rules.

It is expected that at some point the switch will either fail or reach an operational limit

What will we report – # of rules/sec, # rules, # updates/sec, … ?

Should we also be putting traffic against the switch at the same time – a file transfer/GridFTP test or … ?

Traffic, CPU levels, … will be monitored and reported via SNMP MIB polls running every 30 seconds

Are there “rule complexity” tests we want to run – # of simple rules, # of complex rules, …

Results will be uploaded to sites.duke.edu/dukesdn (format to be decided at a later date)
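As one possible starting point for the reporting question above, the sketch below derives rules/sec and the maximum number of installed rules from a series of (elapsed seconds, cumulative rules installed) samples. The sample format and the numbers are hypothetical; they are not the output format of oFlops or of any particular switch.

```python
def rule_install_stats(samples):
    """Summarize rule installation from (elapsed_s, cumulative_rules) samples.

    Samples must be sorted by time with strictly increasing timestamps.
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    rates = []
    for (t0, n0), (t1, n1) in zip(samples, samples[1:]):
        if t1 <= t0:
            raise ValueError("timestamps must be strictly increasing")
        rates.append((n1 - n0) / (t1 - t0))
    total_time = samples[-1][0] - samples[0][0]
    total_rules = samples[-1][1] - samples[0][1]
    return {
        "peak_rules_per_sec": max(rates),
        "mean_rules_per_sec": total_rules / total_time,
        "max_rules_installed": max(n for _, n in samples),
    }


# Hypothetical run in which the install rate tails off as the table fills.
stats = rule_install_stats([(0, 0), (1, 500), (2, 900), (3, 1000)])
print(stats)
```

The same calculation would apply to updates/sec if the samples counted rule modifications instead of new rules.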

SDN1.0 Completeness Score

The vendor's OpenFlow 1.0 implementation will be evaluated for the services that the Duke community (Victor/Jeff/…) determines to be important.

The goal is to confirm that the vendor implemented the MUST-haves, and to look for any MAY-haves that we think are important
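One way the completeness score could be tallied is sketched below. The checklist entries and the pass/fail results are illustrative only: the real list, and the MUST/MAY classification of each item, would have to be taken from the OpenFlow 1.0 specification and from the Duke community's priorities.

```python
def completeness(checklist, results):
    """Count implemented features per requirement level.

    checklist: feature name -> "MUST" or "MAY"
    results:   feature name -> True if the vendor implements it
    Features absent from results are treated as not implemented.
    """
    score = {}
    for level in ("MUST", "MAY"):
        features = [f for f, lvl in checklist.items() if lvl == level]
        passed = [f for f in features if results.get(f, False)]
        score[level] = (len(passed), len(features))
    missing_must = sorted(
        f for f, lvl in checklist.items()
        if lvl == "MUST" and not results.get(f, False)
    )
    return score, missing_must


# Illustrative checklist and made-up results; verify levels against the spec.
checklist = {
    "forward_to_controller": "MUST",
    "drop": "MUST",
    "enqueue": "MAY",
    "modify_field": "MAY",
}
results = {"forward_to_controller": True, "drop": False, "modify_field": True}

score, missing_must = completeness(checklist, results)
print(score, "missing MUST items:", missing_must)
```

Reporting the MUST and MAY counts separately, with the missing MUST items listed by name, keeps a single failed requirement from being hidden inside an overall percentage.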

What else should we do and WHAT HAVE I MISSED?