The monitoring server records network load and CPU load under several different sets of conditions, ranging from no load to saturation of the network with both data packets and OpenFlow rules.

Hardware

- Dell chassis named A, B, and C, and blades named sdn-A1, A2, A3, B1, B3, C1, C2, and C3

- 10G data ports from these blades to 10G Dell internal switches

- Fibre from the 10G Dell switch to a GBIC connector

- 10G OpenFlow NEC switches, connected in a variety of ways to one another and the 10G Dell internal switches via the 10G GBIC connector

- Monitoring server in chassis C, named sdn-C4, which can see all three NEC switches and run SNMP walks on them

- 3650 Cisco switch connected to all of the NEC switches, as well as to sdn-C4

Software

- GridFTP for transferring files

- snmpwalk for polling the hardware

- Shell scripts (in bash) for controlling the GridFTP and SNMP processes over multiple servers

- tmux terminal multiplexer, which can be used to send the same command to multiple ssh sessions almost simultaneously

- Screen (or tmux) to leave processes running and detach from them

- Floodlight and POX to send OpenFlow rules to the NEC switches

- Python to script OpenFlow rules

- Excel for graphing data

Preliminary Discovery

- A 1G file (1024*1024*1024= 1073741824) was sent from sdn-B1 to sdn-C1 forty times to confirm the SNMP counter increment.

- The start time for the 40 pushes was 14:36:44
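The expected counter increment for this check can be worked out in advance. The sketch below assumes the 1G file is 1024*1024*1024 bytes and that each send crosses the monitored port exactly once; the function name is illustrative only. Because the SNMP octet counters also count framing and protocol headers, the observed increment should come out somewhat higher than this payload figure.

```python
# Minimal sketch: payload octets expected on a port after repeated sends.
# Assumption: the "1G" file is 1024**3 bytes and every send crosses the port once.

FILE_BYTES = 1024 * 1024 * 1024   # 1073741824
SENDS = 40


def expected_octets(file_bytes: int, sends: int) -> int:
    """Return the total payload octets for `sends` transfers of one file."""
    return file_bytes * sends


if __name__ == "__main__":
    total = expected_octets(FILE_BYTES, SENDS)
    print("payload octets:", total)
    print("payload bits:  ", total * 8)
```

The result is a lower bound to compare against the difference between the counter readings taken before and after the forty sends.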

Method

- On each of the data blades (sdn-A1 through sdn-C3) set up files 8Gb in size (1024*1024*1024*8 bits = 8589934592 bits). These are filled with the number 1, over and over.

- Using GridFTP, set up a method for transferring the files over the 10G network easily without filling up the destination hard drive (gftp_send_files-var.sh).

- Also set up a method for sending two or more instances of this file to and from the same machines simultaneously (for example, from sdn-A1 to sdn-B1 and sdn-A1 to sdn-B1) via GridFTP from different ssh sessions via tmux or cron jobs.

- A sample tmux command line such as the following starts the monitoring in windows 5 and 7 at almost the same time: for line in 5 7; do tmux select-window -t $line; tmux send-keys "sh code/snmp_code/watch_port.sh 3 18$line" Enter; done; tmux select-window -t 0

- Capture the send data from the server’s point of view using the ‘time’ command (also in gftp_send_files-var.sh).

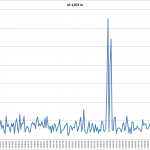

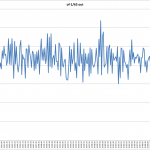

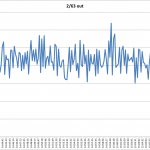

- Write a script that uses snmpwalk to record the 64-bit octet counters ifHCInOctets and ifHCOutOctets on the switch ports while the GridFTP sends are running, with timestamps and a 10-second sampling interval, so that the difference between successive readings can be calculated. The SNMP MIB entries are indexed by port identifier, which is not the same as the port number. “In” refers to data coming in to the switch through that port, whereas “Out” refers to data leaving the switch by that port (watch_port.sh).

- While the GridFTP sends are running, push sets of OpenFlow rules to the switches, starting with a test at 100 rules per second and increasing in subsequent sets to 300 and then 700 rules per second. Mark the start and stop times of each test (pox.py).

- Use Excel formulae to calculate the difference in the SNMP counters, keeping in mind the idea that the counters “roll” (reset to zero) about once per day.

- The counters record the cumulative number of bytes (octets, 8 bits each) that have passed through the port since the counter last rolled over to 0, up to a maximum of 2^64. A counter reading of 478041734 therefore means that a total of 3824333872 bits have gone through that port since the last roll-over.

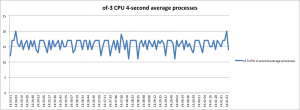

- Use another script to poll the CPU load average with snmpwalk while the send tests are running.
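The conversion from octet counter readings to bits, and from two successive readings to an average throughput, can be sketched as follows. The function names are illustrative, the 10-second interval is taken from the sampling rate described above, and the throughput helper assumes the counter did not roll over between the two readings.

```python
# Minimal sketch: octet counter arithmetic for one switch port.
# Assumption: `prev` and `curr` are readings taken `interval_s` seconds apart
# with no counter roll-over in between.

def octets_to_bits(octets: int) -> int:
    """Each octet counted by the switch is 8 bits."""
    return octets * 8


def throughput_gbps(prev: int, curr: int, interval_s: float = 10.0) -> float:
    """Average throughput in Gbit/s between two counter readings."""
    return octets_to_bits(curr - prev) / interval_s / 1e9


if __name__ == "__main__":
    print(octets_to_bits(478041734))           # reading used in the text above
    print(throughput_gbps(0, 12_500_000_000))  # hypothetical pair of readings
```

A port that moves 12500000000 octets in one 10-second sample is running at the 10G line rate, which gives a quick sanity check when reading the plots.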

Controls

- Source and destination nodes (sdn-A1 through sdn-C3) across one, two, or three switches. Even within the same chassis, packets are sent out to the external switch and back in again. This means that packets sent from A1 to A2 cross one switch, packets from A1 to B1 cross two, and packets from A1 to C1 can cross two or three switches depending on the rules set in OpenFlow.

- Size of file sent (8Gb)

- Number of files sent from the same server at the same time (1-?)

- Number of sends going through a given switch at a time (1-?)

- Number of rules pushed to the switches (0-700)

Data Interpretation

- In Excel, each network throughput counter reading is compared with the one previous to it, to calculate the difference between them. Care must be taken to make sure that the “roll-over” is checked for, leading to an if-then formula in Excel:

=IF(port!B2<port!B1,((2^64)-port!B1)+port!B2,port!B2-port!B1)

meaning that if a reading is smaller than the one before it, the counter has rolled over, so the distance from the prior reading to the end of the counter (2^64) is added to the current reading, which is its distance from the beginning of the counter (0). Otherwise, the prior reading is simply subtracted from the current reading.

Over time, this result can be plotted to show a distinct curve as data throughput is increased or decreased.
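The roll-over check can also be written as a short Python function, which may be useful for cross-checking the spreadsheet. This is a sketch under the same assumption as the text, namely that the counter wraps at 2^64 and rolls over at most once between two readings; the function name is illustrative. Python integers are exact at this size, whereas spreadsheet floating-point values lose precision near 2^64.

```python
# Minimal sketch: roll-over aware difference between two counter readings.
# Assumption: the counter wraps at 2**64 and wraps at most once per interval.

COUNTER_MODULUS = 2 ** 64


def counter_delta(prev: int, curr: int, modulus: int = COUNTER_MODULUS) -> int:
    """Octets counted between two readings, allowing for one roll-over."""
    if curr < prev:
        # Distance from prev to the end of the counter, plus the new reading.
        return (modulus - prev) + curr
    return curr - prev


if __name__ == "__main__":
    print(counter_delta(100, 250))          # no roll-over
    print(counter_delta(2 ** 64 - 10, 5))   # roll-over between readings
```

If the switch resets a counter to zero for some other reason (for example a reboot), the readings on either side of the reset cannot be reconciled this way and that interval is better discarded.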

- While these data are processed, it is crucial to note the start times for pushing the testing files (4G or 8G) and also the start times and end times for pushing OpenFlow rules.

- The CPU load average numbers can be plotted in Excel.

Scripts

watch_port.sh

#!/bin/bash

# Need switch (2,3, or 4) and port # - 145-195

switch=$1

port=$2

if [ -z "$switch" ]

then

echo "Need switch by IP (2, 3, or 4)"

exit 1

fi

if [ -z "$port" ]

then

echo "Need port OID (145-195)"

exit 1

fi

echo "This loop will run until you ctrl-c it."

while true

do

# Timestamp for this sample (HH:MM:SS)

secs=$(date +%T)

walkout=`snmpwalk -c openflow -v1 -On 10.138.97.$switch ifHCOutOctets.$port | cut -d. -f 13 | cut -d ' ' -f 4`

compositeout="$secs,$walkout"

# echo $compositeout

echo $compositeout >> /home/bryn/data/R$switch-P$port.switch.ifHCOutOctets.nec.csv

walkin=`snmpwalk -c openflow -v1 -On 10.138.97.$switch ifHCInOctets.$port | cut -d. -f 13 | cut -d ' ' -f 4`

compositein="$secs,$walkin"

# echo $compositein

echo $compositein >> /home/bryn/data/R$switch-P$port.switch.ifHCInOctets.nec.csv

secs=''

walkout=''

compositeout=''

compositein=''

sleep 10

done
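The CSV files written by watch_port.sh can be loaded for processing outside Excel. The sketch below assumes each row has the form timestamp,counter and that a failed walk leaves the counter field empty; the function name and the sample rows are hypothetical.

```python
# Minimal sketch: read "timestamp,counter" rows as written by watch_port.sh.
# Assumption: a failed snmpwalk leaves the counter field empty, so such rows
# are skipped rather than treated as zero.
import csv
import io


def read_samples(text: str) -> list:
    """Return (timestamp, counter) tuples, skipping incomplete rows."""
    samples = []
    for row in csv.reader(io.StringIO(text)):
        if len(row) != 2 or not row[1].strip().isdigit():
            continue
        samples.append((row[0].strip(), int(row[1])))
    return samples


if __name__ == "__main__":
    # Hypothetical file contents, for illustration only.
    sample_text = "14:36:44,1000\n14:36:54,\n14:37:04,3500\n"
    for stamp, counter in read_samples(sample_text):
        print(stamp, counter)
```

Skipping an empty row means the next difference spans more than one 10-second interval, so the timestamps should be checked before converting a difference into a rate.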

gftp_send_files-var.sh

#!/bin/bash

# Sends the given file to the target machine via the SDN network, and appends the output to /home/bryn/log/$hostname-gridftp-$size-$instance.out.

# It sits in crontab and will run every hour on the hour or whatever test timing seems appropriate.

hostname=`hostname -s`

size=$1;

line=$2;

instance=$3;

if [ -z "$size" ]

then

echo "Need size (4G or 8G) "

exit 1

fi

if [ -z "$line" ]

then

echo "Need target (A1, A2, A3, B1, B2, B3, C1, C2, C3) "

exit 1

fi

if [ -z "$instance" ]

then

echo "instance ? of ? (i.e. 2 of 3)?"

exit 1

fi

start=''

stop=''

filename="/home/bryn/log/$hostname-gridftp-$size-$instance.out"

echo >> $filename

echo $filename

echo $line-10g

echo

echo >> $filename

echo To: sdn-$line-10g >> $filename

start=`date +%T`

echo Start: $start >> $filename

/usr/bin/time -v -ao$filename /usr/bin/globus-url-copy -v file:///home/bryn/data/$hostname-$size.txt sshftp://sdn-$line-10g/ramdisk/$hostname-$size-$instance-received.txt

echo /usr/bin/time -v -ao$filename /usr/bin/globus-url-copy -v file:///home/bryn/data/$hostname-$size.txt sshftp://sdn-$line-10g/ramdisk/$hostname-$size-$instance-received.txt

stop=`date +%T`

echo Stop: $stop >> $filename

echo >> $filename

#### WARNING - this will fill up / very quickly, do something!

ssh sdn-$line-10g rm /ramdisk/$hostname-$size-$instance-received.txt
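The Start and Stop lines that gftp_send_files-var.sh writes to its log can be turned into an elapsed time and an effective transfer rate. The sketch below assumes both timestamps are in the HH:MM:SS form produced by date +%T and that a single send takes less than 24 hours; the function names are illustrative.

```python
# Minimal sketch: elapsed time and effective rate from the Start/Stop log lines.
# Assumption: timestamps are HH:MM:SS and a send never lasts 24 hours or more.
from datetime import datetime, timedelta


def elapsed_seconds(start: str, stop: str) -> int:
    """Seconds between two HH:MM:SS stamps, allowing a send to cross midnight."""
    fmt = "%H:%M:%S"
    t0 = datetime.strptime(start, fmt)
    t1 = datetime.strptime(stop, fmt)
    if t1 < t0:
        t1 += timedelta(days=1)
    return int((t1 - t0).total_seconds())


def effective_gbps(payload_bytes: int, seconds: int) -> float:
    """Payload throughput in Gbit/s for one send."""
    if seconds <= 0:
        raise ValueError("elapsed time must be positive")
    return payload_bytes * 8 / seconds / 1e9


if __name__ == "__main__":
    secs = elapsed_seconds("14:36:44", "14:36:52")   # hypothetical log values
    print(secs, effective_gbps(10 ** 9, secs))
```

The one-second resolution of the log stamps is coarse for short sends; the elapsed wall-clock figure in the /usr/bin/time -v output is the finer-grained source when it is available.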

pox.py

#!/bin/sh -

# Copyright 2011-2012 James McCauley

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at:

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# If you have PyPy 1.6+ in a directory called pypy alongside pox.py, we

# use it.

# Otherwise, we try to use a Python interpreter called python2.7, which

# is a good idea if you're using Python from MacPorts, for example.

# We fall back to just "python" and hope that works.

''''true

#export OPT="-u -O"

export OPT="-u"

export FLG=""

if [ "$(basename $0)" = "debug-pox.py" ]; then

export OPT=""

export FLG="--debug"

fi

if [ -x pypy/bin/pypy ]; then

exec pypy/bin/pypy $OPT "$0" $FLG "$@"

fi

if type python2.7 > /dev/null 2> /dev/null; then

exec python2.7 $OPT "$0" $FLG "$@"

fi

exec python $OPT "$0" $FLG "$@"

'''

from pox.boot import boot

if __name__ == '__main__':

  boot()