Jianan Wang ran a series of perfSONAR tests. In some, the test run times were scheduled to overlap; in others, the runs did not overlap.
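Whether two runs overlap depends only on whether their time windows intersect. The sketch below is minimal and assumes each run is recorded as a start and end time in seconds. The `TestRun` record and the sample schedule are hypothetical and are not perfSONAR's own data format.

```python
from dataclasses import dataclass


@dataclass
class TestRun:
    """One throughput test: a label plus start/end times in seconds (hypothetical schema)."""
    name: str
    start: float
    end: float


def overlaps(a: TestRun, b: TestRun) -> bool:
    """Half-open windows [start, end) overlap when each starts before the other ends."""
    return a.start < b.end and b.start < a.end


def overlapping_runs(runs: list[TestRun]) -> set[str]:
    """Return the names of runs that overlap at least one other run."""
    hits: set[str] = set()
    for i, a in enumerate(runs):
        for b in runs[i + 1:]:
            if overlaps(a, b):
                hits.add(a.name)
                hits.add(b.name)
    return hits


# Hypothetical schedule: the first two runs share time, the third runs alone.
runs = [
    TestRun("SDN10->SDN05", 0, 30),
    TestRun("SDN11->SDN06", 10, 40),
    TestRun("SDN12->SDN07", 100, 130),
]
print(sorted(overlapping_runs(runs)))  # ['SDN10->SDN05', 'SDN11->SDN06']
```

Because the windows are treated as half-open, a run that starts exactly when another ends is counted as non-overlapping.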

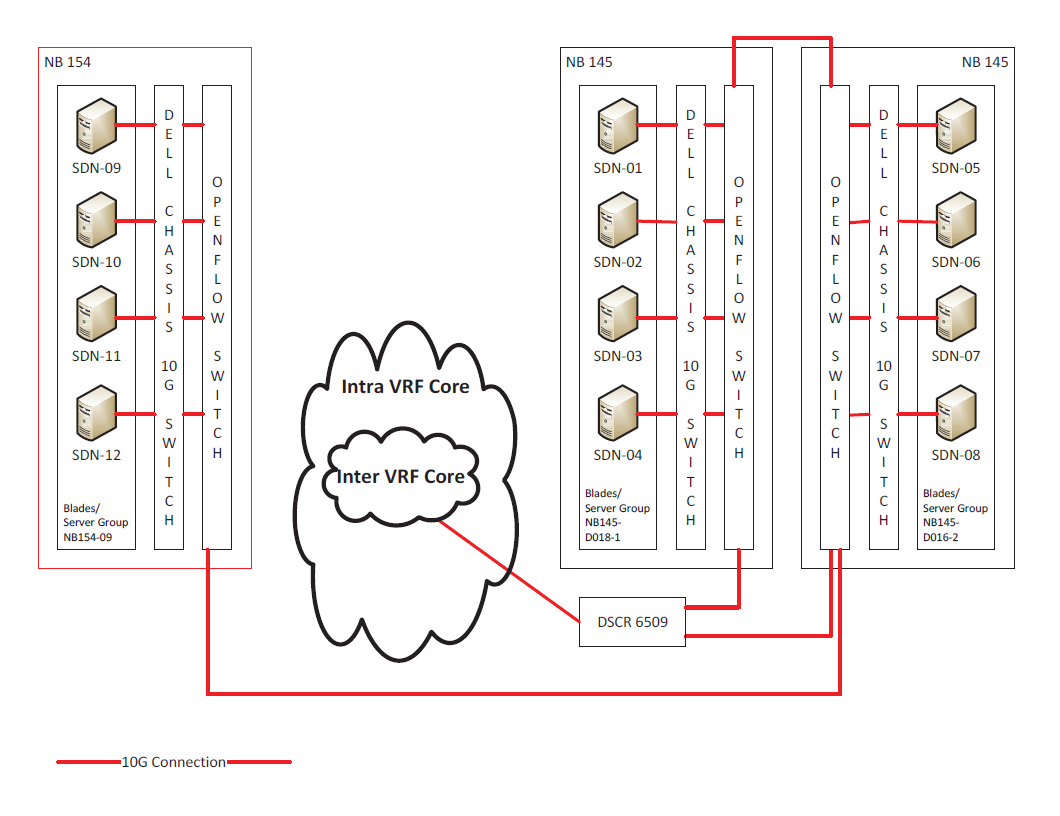

The three blade chassis, each containing four blade servers, are connected to three independent OpenFlow switches.

Connections from blades SDN09/SDN10/SDN11/SDN12 to SDN05/SDN06/SDN07/SDN08 traverse two OpenFlow switches.

Connections from blades SDN09/SDN10/SDN11/SDN12 to SDN01/SDN02/SDN03/SDN04 traverse three OpenFlow switches.
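The switch counts above follow from how the chassis are wired. The minimal model below makes two assumptions. First, blades SDN01-04, SDN05-08, and SDN09-12 each share a chassis and attach to that chassis's own switch. Second, the three switches form a linear chain, which is the simplest wiring that gives the two- and three-switch paths described. The switch names `S1`-`S3` are hypothetical. A breadth-first search then counts the switches on the shortest path between two blades.

```python
from collections import deque

# Assumption: SDN01-04 -> S1, SDN05-08 -> S2, SDN09-12 -> S3 (switch names are hypothetical).
BLADE_TO_SWITCH = {f"SDN{n:02d}": f"S{(n - 1) // 4 + 1}" for n in range(1, 13)}

# Assumption: a linear chain S1 - S2 - S3, consistent with the 2- and 3-switch paths above.
SWITCH_LINKS = {"S1": ["S2"], "S2": ["S1", "S3"], "S3": ["S2"]}


def switches_traversed(src: str, dst: str) -> int:
    """Count switches on the shortest path between two blades (BFS over the switch graph)."""
    start, goal = BLADE_TO_SWITCH[src], BLADE_TO_SWITCH[dst]
    queue = deque([(start, 1)])  # entries: (current switch, switches counted so far)
    seen = {start}
    while queue:
        switch, count = queue.popleft()
        if switch == goal:
            return count
        for nxt in SWITCH_LINKS[switch]:
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, count + 1))
    raise ValueError(f"no path from {src} to {dst}")


print(switches_traversed("SDN10", "SDN05"))  # 2
print(switches_traversed("SDN10", "SDN01"))  # 3
```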

As expected, the runs with no overlap showed approximately 9 Gbps of available bandwidth for traffic through two switches. The runs that overlapped (across three servers) showed approximately 3 Gbps, about one third of that figure.
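One way to read the 9 Gbps versus 3 Gbps result is as an idealized fair share. This assumes three overlapping tests split the same roughly 9 Gbps bottleneck equally, which is an assumption for illustration and not something the measurements themselves establish. The helper name below is hypothetical.

```python
def per_test_share_gbps(available_gbps: float, concurrent_tests: int) -> float:
    """Bandwidth each test would see if `concurrent_tests` flows split a
    bottleneck of `available_gbps` equally (idealized fair-share assumption)."""
    if concurrent_tests < 1:
        raise ValueError("concurrent_tests must be at least 1")
    return available_gbps / concurrent_tests


# ~9 Gbps measured for a lone test through two switches; three tests overlapping.
solo = per_test_share_gbps(9.0, 1)
shared = per_test_share_gbps(9.0, 3)
print(f"alone: {solo:.1f} Gbps, overlapped: {shared:.1f} Gbps")
# alone: 9.0 Gbps, overlapped: 3.0 Gbps
```

Real TCP flows rarely split a link perfectly evenly, so per-test results around 3 Gbps should be expected to vary from run to run.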

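In the unblocked (non-overlapping) tests, traffic that crossed the additional third switch showed about 1 Gbps less available bandwidth than traffic through two switches (roughly 8 Gbps rather than 9 Gbps).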

As a reminder, here is an overview of the server topology:

Here is a shorter time window that shows the variation between blocking and non-blocking perfSONAR tests. Note that this window covers only SDN10's connections to SDN01 and SDN05.