Unearthing A New Way Of Studying Biology

Yes, students, worms will be on the test.

Eric Hastie, a postdoctoral researcher in the David Sherwood Lab, has designed a course for Duke University undergraduates in which biology students get to genetically modify worms. Hastie calls the course a C.U.R.E.: a course-based undergraduate research experience. The proposed course is designed as a hands-on, semester-long exploration of molecular biology and CRISPR genome editing.

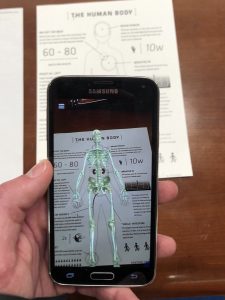

In the course, students will learn the science behind genome editing before trying it themselves. Ideally, by the end of the semester, each student will have modified the genome of the nematode C. elegans in some way. Each student will tag a specific gene in one of these worms with a colored marker, then trace that marker in the worm’s offspring under a microscope, making the chosen characteristic easy to image and observe.

Once it is offered, the course will be the third of its kind in the nation, giving undergraduates an interactive and meaningful research experience. Hastie designed it to give students transferable skills, even if they go on to careers or coursework outside of research.

“For students who may not be considering a future in research, this proposed class provides an experience where they can explore, question, test, and learn without the pressures of joining a faculty research lab,” he told me.

Why worms? Perhaps not an age-old question, but one that piqued my interest all the same. According to Hastie, worms and undergraduate research pair particularly well: worms are cost-effective, readily available, take up little space (adults grow to only about 1 mm long!), and are easy to maintain. Even among worms, C. elegans makes a particularly strong case for itself. The worms are transparent, giving them a ‘leg up’ on some of their nematode colleagues, since inserted colored markers are easy to see under a microscope. And because the markers inserted into a parent worm only become visible in its offspring, C. elegans’ hermaphroditic reproductive cycle is also essential to the course: each worm can fertilize itself, so a single edited worm can produce marked offspring without a mate.

“It’s hard to say what will eventually come of our current research into C. elegans, but that’s honestly what makes science exciting,” said undergraduate researcher David Chen, who works alongside Hastie. “Maybe through our understanding of how certain proteins degrade over time in aging worms, we can better understand aging in humans and how we can live longer, healthier lives.”

The kind of research Hastie’s class proposes has the potential to inform research on the human genome. Humans and these transparent, microscopic worms have more in common than you might think: results from studies using worms such as C. elegans, including pharmaceutical research, often prove applicable to humans. Already, some students working with Hastie have received requests from labs at other universities to test their tagged worms. So perhaps, with the help of Hastie’s class, these students can alter the course of science.

“I certainly contribute to science with my work in the lab,” said junior Ryan Sellers, a research contributor. “Whether it’s investigating a gene involved in a specific cancer pathway or helping shape Dr. Hastie’s future course, I am adding to the collective body of knowledge known as science.”

Post by Rebecca Williamson